Windows Server Failover Clustering (WSFC) is a feature of the Microsoft Windows Server operating system that provides fault tolerance and high availability (HA) for applications and services. It enables several computers to host a service; if one has a fault, the remaining computers automatically take over hosting the service. It is included with Windows Server 2022, Windows Server 2019, Windows Server 2016 and Azure Stack HCI.

In WSFC, each individual server is called a node. The nodes can be physical computers or virtual machines, and are connected through physical connections and through software. Two or more nodes are combined to form a cluster, which hosts the service. The cluster and nodes are constantly monitored for faults. If a fault is detected, the nodes with issues are removed from the cluster and the services may be restarted or moved to another node.

Capabilities of Windows Server Failover Clustering (WSFC)

Windows Server Failover Cluster performs several functions, including:

- Unified cluster management. The configuration of the cluster and service is stored on each node within the cluster. Changes to the configuration of the service or cluster are automatically sent to each node. This allows for a single update to change the configuration on all participating nodes.

- Resource management. Each node in the cluster may have access to resources such as networking and storage. These resources can be shared by the hosted application to increase the cluster performance beyond what a single node can accomplish. The application can be configured to have startup dependencies on these resources. The nodes can work together to ensure resource consistency.

- Health monitoring. The health of each node and the overall cluster is monitored. Each node uses heartbeat and service notifications to determine health. The cluster health is voted on by the quorum of participating nodes.

- Automatic and manual failover. Resources have a primary node and one or more secondary nodes. If the primary node fails a health check or is manually triggered, ownership and use of the resource is transferred to the secondary node. Nodes and the hosted application are notified of the failover. This provides fault tolerance and allows rolling updates not to affect overall service health.

Common applications that use WSFC

A number of different applications can use WSFC, including:

- Database Server

- Windows Distributed File System (DFS) Namespace Server

- File Server

- Hyper-V

- Microsoft Exchange Server

- Microsoft SQL Server

- Namespace Server

- Windows Internet Name Service (WINS) Server

WSFC voting, quorum and witnesses

Every cluster network must account for the possibility of individual nodes losing communication to the cluster but still being able to serve requests or access resources. If this were to happen, the service could become corrupt and serve bad responses or cause data stores to become out of sync. This is known as split-brain condition.

WSFC uses a voting system with quorum to determine failover and to prevent a split-brain condition. In the cluster, the quorum is defined as a majority (more than half) of the total votes. After a fault, the nodes vote to stay online. If fewer than the quorum number vote yes, those nodes are removed. For example, a cluster of five nodes has a fault, causing three to stay in communication in one segment and two in the other. The group of three will have the quorum and stay online, while the other two will not have a quorum and will go offline.
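The majority rule can be illustrated with a few lines of Python. This is only a sketch of the arithmetic, assuming one vote per node and no witness; the function name `has_quorum` is made up for illustration and is not part of any WSFC API.

```python
def has_quorum(votes_present: int, total_votes: int) -> bool:
    """A partition keeps quorum only with a strict majority of all votes."""
    return 2 * votes_present > total_votes


# Five-node cluster split by a fault into partitions of three and two nodes.
total_votes = 5
for partition_size in (3, 2):
    state = "stays online" if has_quorum(partition_size, total_votes) else "goes offline"
    print(f"partition of {partition_size} nodes: {state}")
```

Note that exactly half of the votes is not enough: in a four-node cluster, a partition of two nodes does not hold a majority.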

In small clusters, an extra witness vote should be added. The witness is an extra vote that is added as a tiebreaker in clusters with even numbers of nodes. Without a witness, if half of the nodes go offline at one time, the whole service is stopped. A witness is required in clusters with only two nodes and recommended for three- and four-node clusters. In clusters of five or more nodes, a witness does not provide benefits and is not needed. The witness information is stored in a witness.log file. It can be hosted as a File Share Witness, an Azure Cloud Witness or as a Disk Witness (aka custom quorum disk).
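The tiebreaker effect of a witness can be sketched in Python as follows. The function name is hypothetical, and the sketch assumes that the witness contributes exactly one vote and remains reachable by the surviving nodes.

```python
def survivors_have_quorum(nodes_up: int, total_nodes: int, witness: bool) -> bool:
    """Return True if the surviving nodes hold a strict majority of votes.

    Assumption: the witness, when configured, casts one vote and is still
    reachable by the surviving nodes.
    """
    extra_vote = 1 if witness else 0
    total_votes = total_nodes + extra_vote
    votes_present = nodes_up + extra_vote
    return 2 * votes_present > total_votes


# Two-node cluster in which one node fails.
print(survivors_have_quorum(1, 2, witness=False))  # 1 of 2 votes: no majority
print(survivors_have_quorum(1, 2, witness=True))   # 2 of 3 votes: majority
```

The same arithmetic applies to a four-node cluster that loses two nodes at once: two of four votes is a tie, while two nodes plus the witness hold three of five votes.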

A dynamic quorum allows the number of votes to constitute a quorum to adjust as faults occur. This way, as long as more than half of the nodes don’t go offline at one time, the cluster will be able to continuously lose nodes without it going offline. This allows for a single node to run the services as the “last man standing.”
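The following Python sketch shows why sequential failures are survivable while a large simultaneous failure is not. It is a deliberately simplified model, not WSFC's actual algorithm: it assumes no witness, assumes the vote total is recalculated after every round of failures, and assumes that one node gives up its vote whenever the number of voters would otherwise be even. All names are hypothetical.

```python
def _odd_voters(alive):
    """Keep the number of voters odd by dropping the last node's vote if needed."""
    return list(alive) if len(alive) % 2 == 1 else list(alive[:-1])


def simulate_failures(nodes, failure_rounds):
    """Apply rounds of simultaneous failures and return the surviving nodes.

    Returns an empty list if the survivors of any round lack a strict
    majority of the votes that existed before that round.
    """
    alive = list(nodes)
    voters = _odd_voters(alive)
    for failed in failure_rounds:
        alive = [n for n in alive if n not in failed]
        votes_present = sum(1 for n in alive if n in voters)
        if 2 * votes_present <= len(voters):
            return []  # quorum lost: the cluster goes offline
        voters = _odd_voters(alive)  # dynamic quorum: recalculate the votes
    return alive


nodes = ["n1", "n2", "n3", "n4", "n5"]
print(simulate_failures(nodes, [["n5"], ["n4"], ["n3"], ["n2"]]))  # one at a time
print(simulate_failures(nodes, [["n3", "n4", "n5"]]))              # three at once
```

In this model the first call ends with a single surviving node, while the second call loses quorum because three of five voters disappear in the same round.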

Windows Server Failover Clustering and Microsoft SQL Server Always On

SQL Server Always On is a high-availability and disaster recovery product for Microsoft SQL Server that takes advantage of WSFC. SQL Server Always On has two configurations that can be used separately or in tandem. A Failover Cluster Instance (FCI) is a SQL Server instance that is installed across several nodes in a WSFC. An Availability Group (AG) is a set of one or more databases that fail over together to replicated copies. Both register components with WSFC as cluster resources.

Windows Server Failover Clustering Setup Steps

See Microsoft for full documentation on how to deploy a failover cluster using WSFC.

- Verify prerequisites:

  - All nodes run the same Windows Server version

  - All nodes use supported hardware

  - All nodes are members of the same Active Directory domain

- Install the Failover Clustering feature using the Add Roles and Features wizard in Server Manager

- Validate the failover cluster configuration

- Create the failover cluster in server manager

- Create the cluster roles and services using Microsoft Failover Cluster Manager (MSFCM)

This was last updated in March 2022

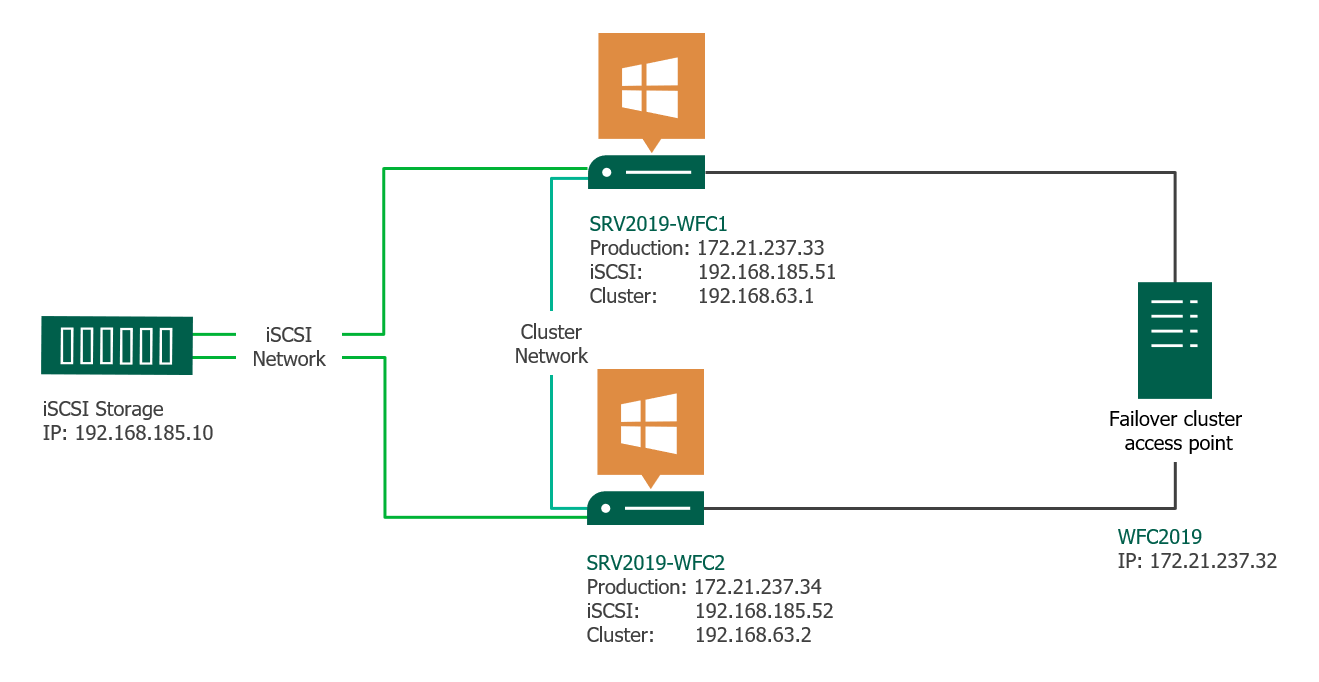

This article gives a short overview of how to create a Microsoft Windows Failover Cluster (WFC) with Windows Server 2019 or 2016. The result will be a two-node cluster with one shared disk and a cluster compute resource (computer object in Active Directory).

Preparation

It does not matter whether you use physical or virtual machines, just make sure your technology is suitable for Windows clusters. Before you start, make sure you meet the following prerequisites:

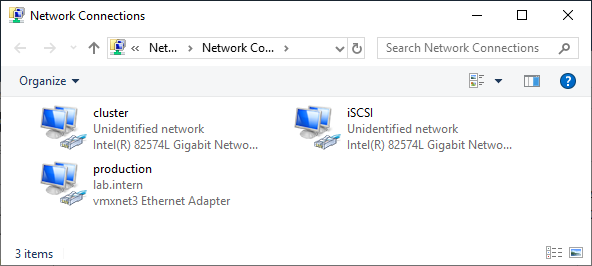

Two Windows Server 2019 machines with the latest updates installed. The machines have at least two network interfaces: one for production traffic, one for cluster traffic. In my example, there are three network interfaces (one additional for iSCSI traffic). I prefer static IP addresses, but you can also use DHCP.

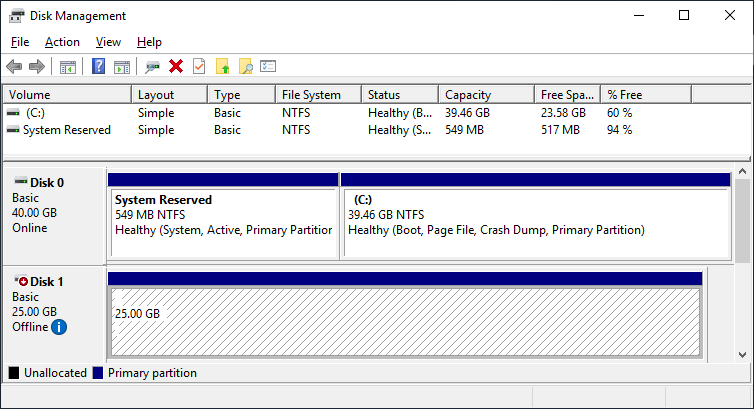

Join both servers to your Microsoft Active Directory domain and make sure that both servers see the shared storage device available in disk management. Don’t bring the disk online yet.

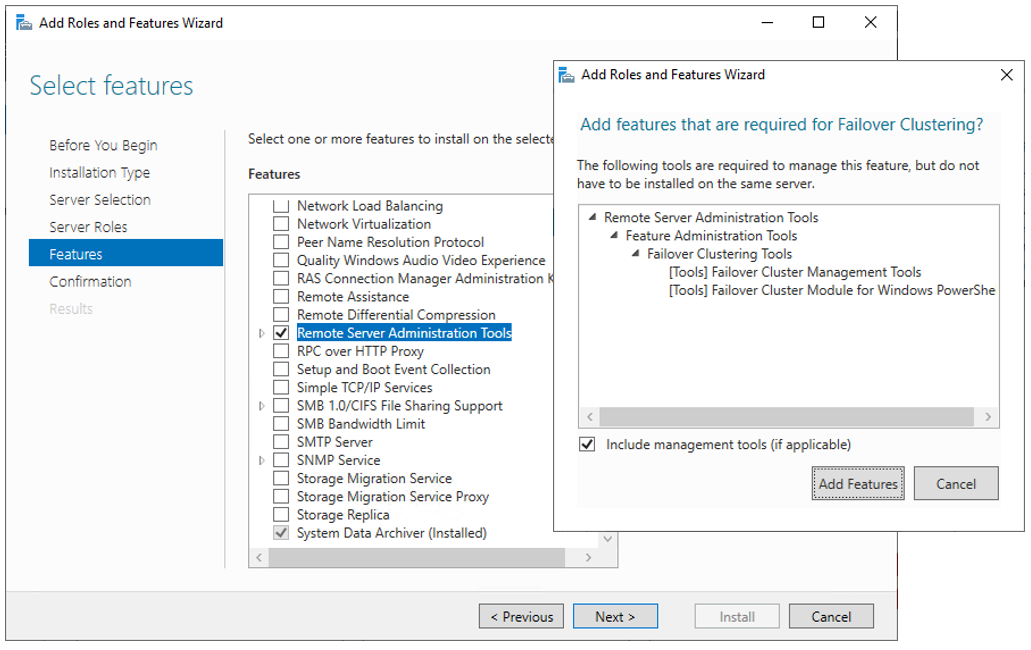

The next step before we can really start is to add the Failover Clustering feature (Server Manager > Add Roles and Features).

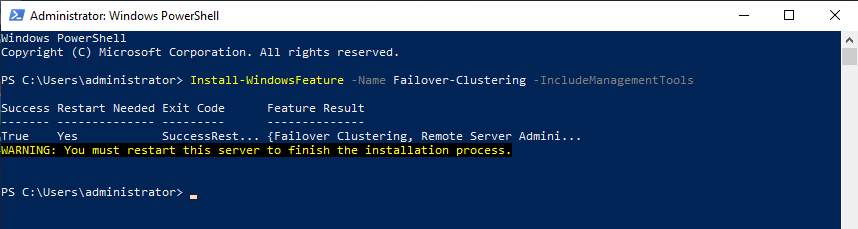

Reboot your server if required. As an alternative, you can also use the following PowerShell command:

Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

After a successful installation, the Failover Cluster Manager appears in the start menu in the Windows Administrative Tools.

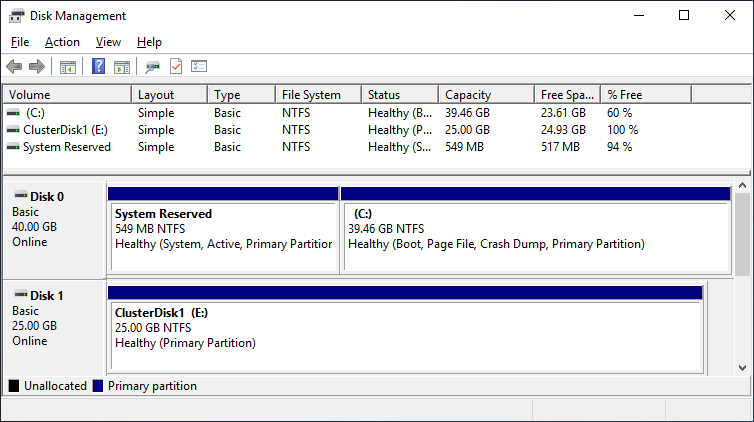

After you have installed the Failover-Clustering feature, you can bring the shared disk online and format it on one of the servers. Don’t change anything on the second server. On the second server, the disk stays offline.

After a refresh of the disk management, you can see something similar to this:

Server 1 Disk Management (disk status online)

Server 2 Disk Management (disk status offline)

Failover Cluster readiness check

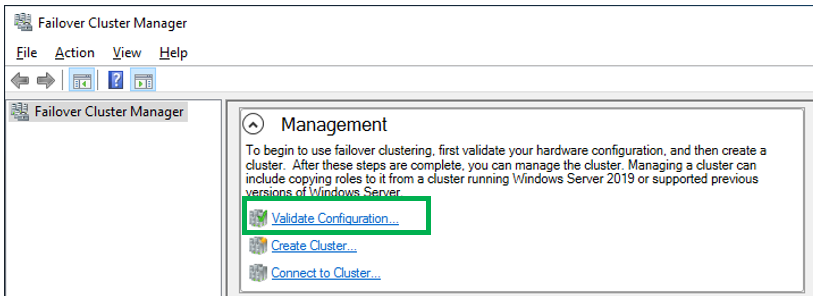

Before we create the cluster, we need to make sure that everything is set up properly. Start the Failover Cluster Manager from the start menu and scroll down to the management section and click Validate Configuration.

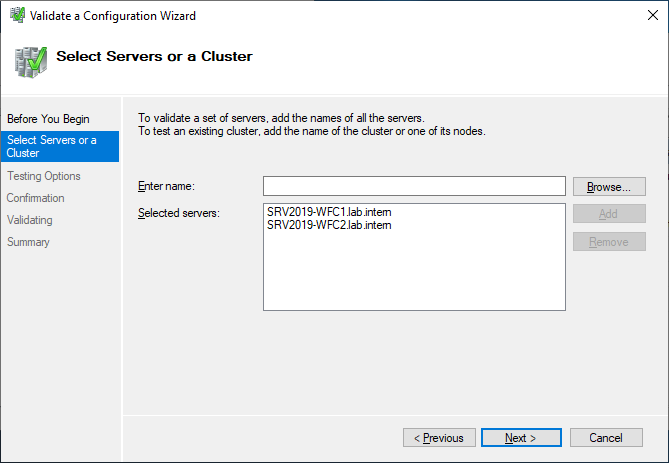

Select the two servers for validation.

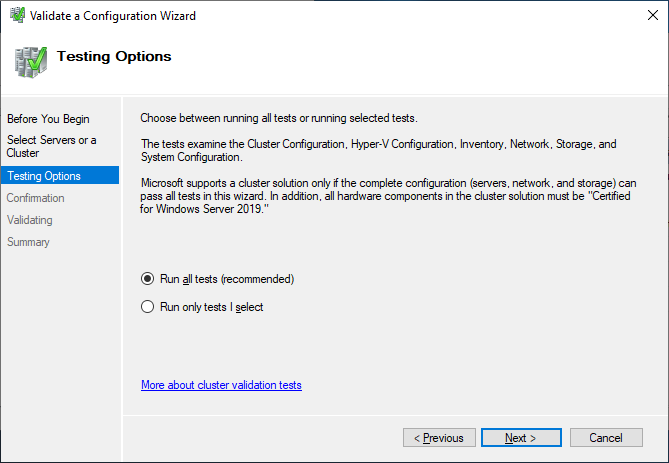

Run all tests. There is also a description of which solutions Microsoft supports.

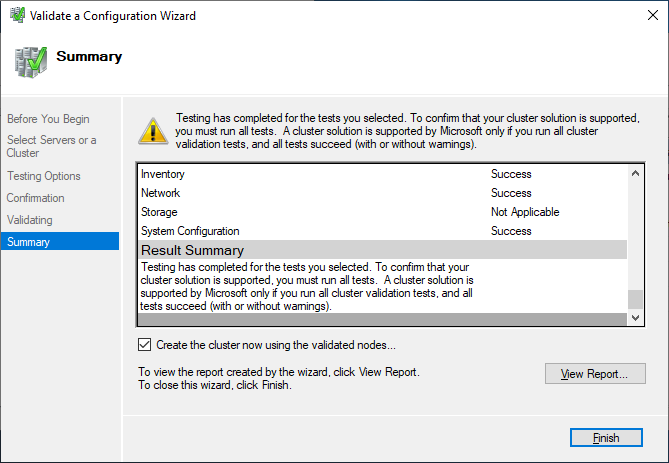

After you have made sure that every applicable test passed with the status “successful,” you can create the cluster by using the checkbox Create the cluster now using the validated nodes, or you can do that later. If you have errors or warnings, you can use the detailed report by clicking on View Report.

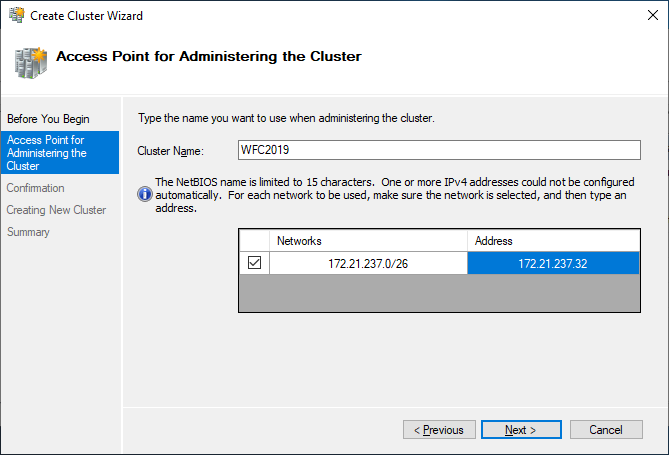

If you choose to create the cluster by clicking on Create Cluster in the Failover Cluster Manager, you will be prompted again to select the cluster nodes. If you use the Create the cluster now using the validated nodes checkbox from the cluster validation wizard, then you will skip that step. The next relevant step is to create the Access Point for Administering the Cluster. This will be the virtual object that clients will communicate with later. It is a computer object in Active Directory.

The wizard asks for the Cluster Name and IP address configuration.

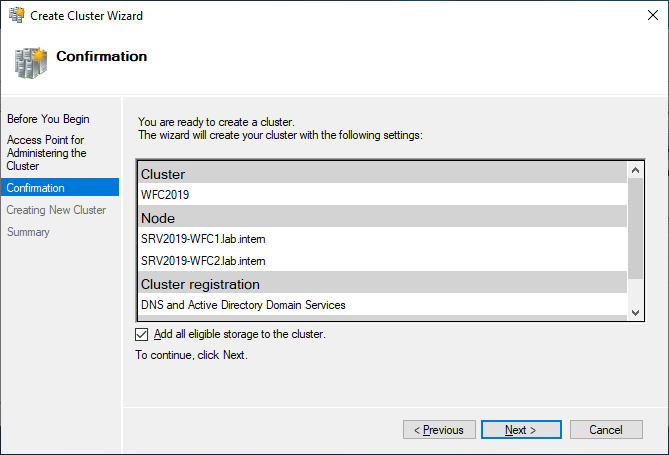

As a last step, confirm everything and wait for the cluster to be created.

By default, the wizard automatically adds the shared disk to the cluster. If you have not configured the disk yet, you can also add it afterwards.

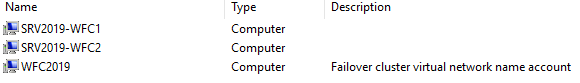

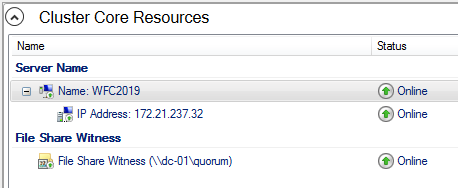

As a result, you can see a new Active Directory computer object named WFC2019.

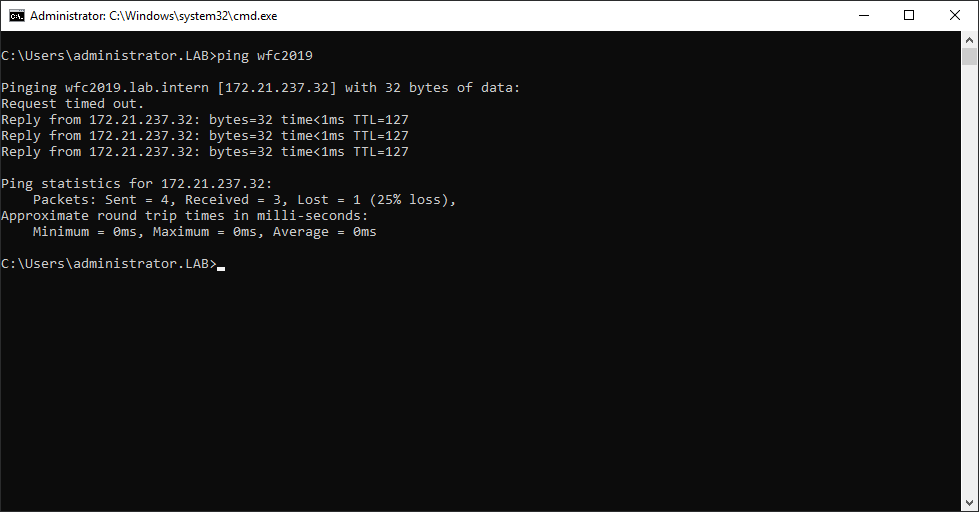

You can ping the new computer to check whether it is online (if you allow ping on the Windows firewall).

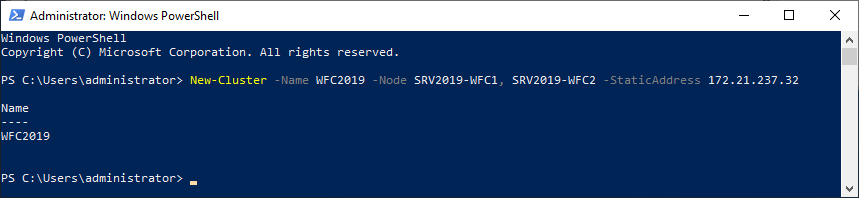

As an alternative, you can also create the cluster with PowerShell. The following command will also add all eligible storage automatically:

New-Cluster -Name WFC2019 -Node SRV2019-WFC1, SRV2019-WFC2 -StaticAddress 172.21.237.32

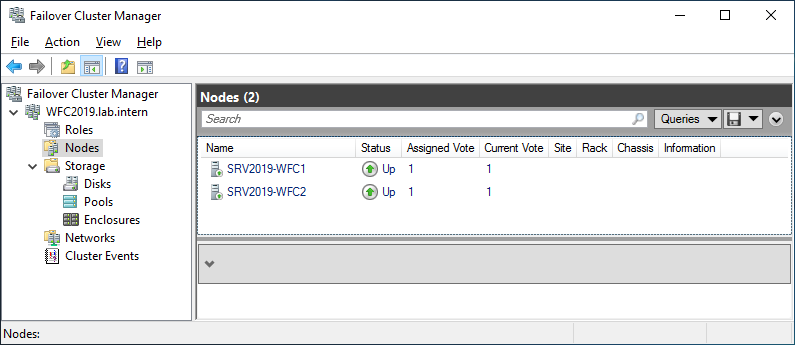

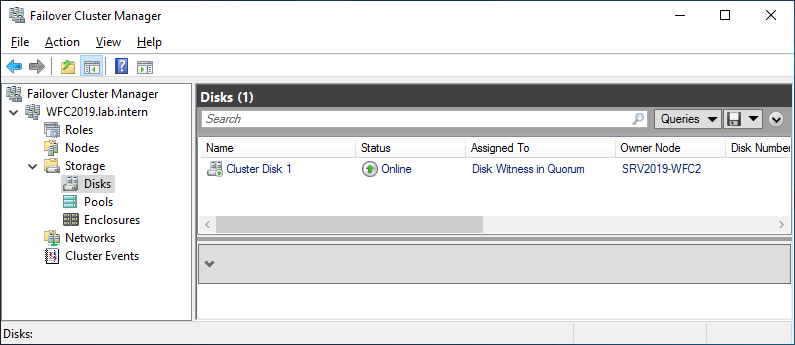

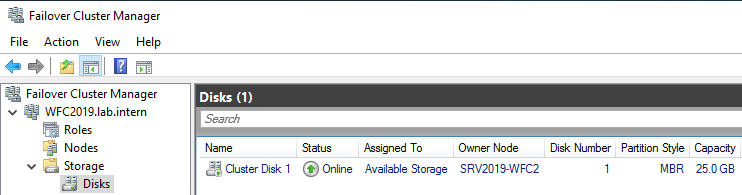

You can see the result in the Failover Cluster Manager in the Nodes and Storage > Disks sections.

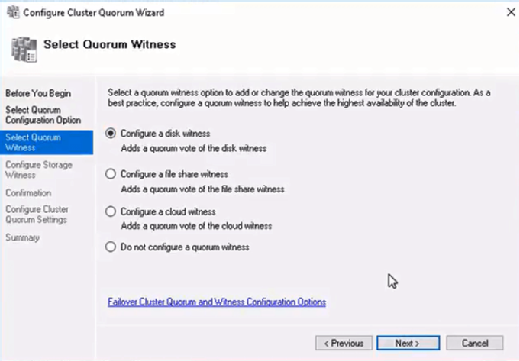

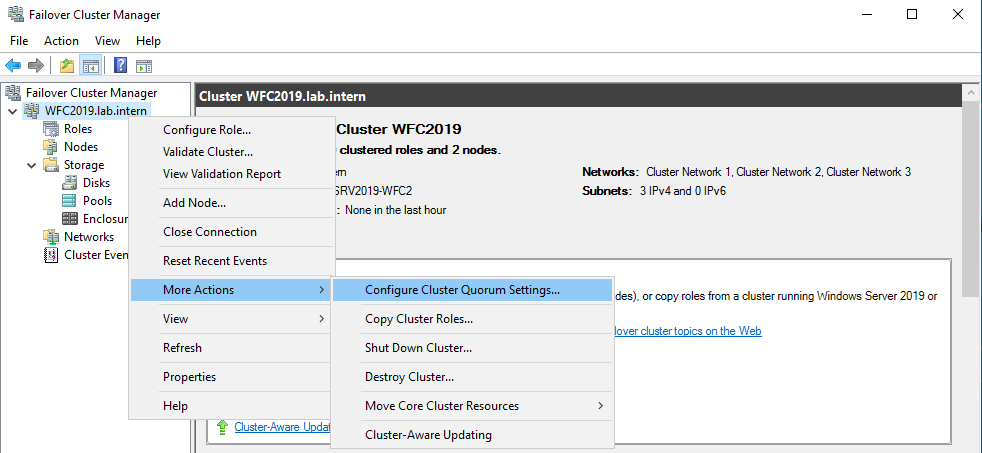

The picture shows that the disk is currently used as the quorum witness. As we want to use that disk for data, we need to configure the quorum manually. From the cluster context menu, choose More Actions > Configure Cluster Quorum Settings.

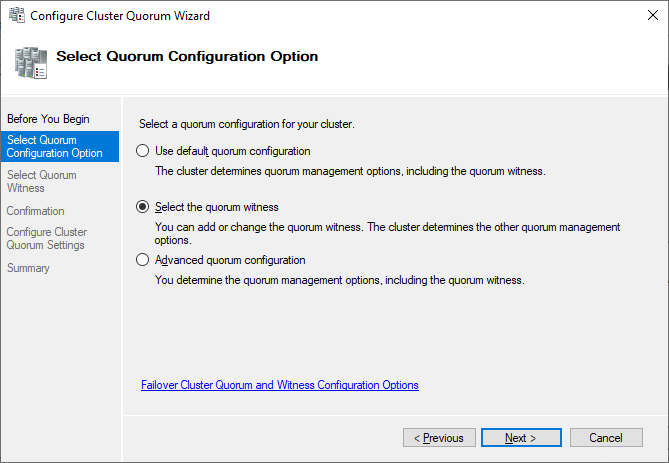

Here, we want to select the quorum witness manually.

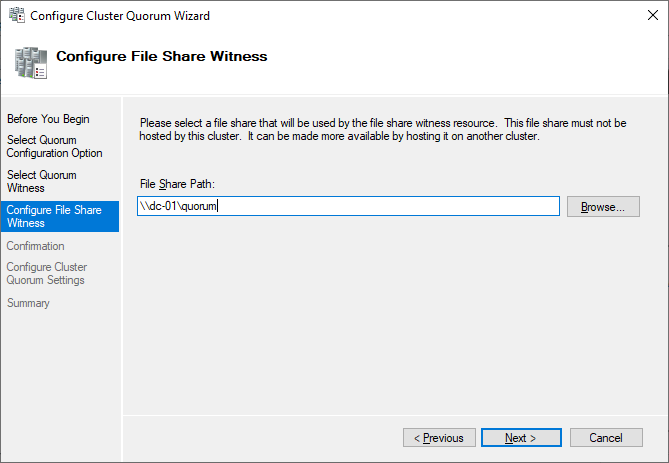

Currently, the cluster is using the disk configured earlier as a disk witness. Alternative options are the file share witness or an Azure storage account as witness. We will use the file share witness in this example. There is a step-by-step how-to on the Microsoft website for the cloud witness. I always recommend configuring a quorum witness for proper operations. So, the last option is not really an option for production.

Just point to the path and finish the wizard.

After that, the shared disk is available for use for data.

Congratulations, you have set up a Microsoft failover cluster with one shared disk.

Next steps and backup

One of the next steps would be to add a role to the cluster, which is out of scope of this article. As soon as the cluster contains data, it is also time to think about backing up the cluster. Veeam Agent for Microsoft Windows can back up Windows failover clusters with shared disks. We also recommend doing backups of the “entire system” of the cluster. This also backs up the operating systems of the cluster members. This helps to speed up restore of a failed cluster node, as you don’t need to search for drivers, etc. in case of a restore.

A failover cluster is a set of computer servers that work together to provide either high availability (HA) or continuous availability (CA). If one of the servers goes down, another node in the cluster can assume its workload with either minimum or no downtime through a process referred to as failover.

Some failover clusters use physical servers only, whereas others involve virtual machines (VMs).

The main purpose of a failover cluster is to provide either CA or HA for applications and services. Also referred to as fault tolerant (FT) clusters, CA clusters allow end users to keep utilizing applications and services without experiencing any timeouts if a server fails. HA clusters might cause a brief interruption in service for customers, but the system will recover automatically with no data loss and minimum downtime.

A cluster is made up of two or more nodes—or servers—that transfer data and software to process the data through physical cables or a dedicated secure network. Other kinds of clustering technology can be used for load balancing, storage, and concurrent or parallel processing. Some implementations combine failover clusters with additional clustering technology.

To protect your data, a dedicated network connects the failover cluster nodes, providing essential CA or HA backup.

How Failover Clusters Work

While CA failover clusters are designed for 100 percent availability, HA clusters attempt 99.999 percent availability—also known as “five nines.” This downtime amounts to no more than 5.26 minutes yearly. CA clusters offer greater availability, but they require more hardware to run, which increases their overall cost.
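The “five nines” figure follows directly from the length of a year. The short Python sketch below reproduces the arithmetic, assuming a 365-day year; the function name is chosen only for illustration.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def max_downtime_minutes(availability_percent: float) -> float:
    """Yearly downtime budget, in minutes, for a given availability target."""
    return MINUTES_PER_YEAR * (1 - availability_percent / 100)


for pct in (99.9, 99.99, 99.999):
    print(f"{pct}% availability -> {max_downtime_minutes(pct):.2f} minutes of downtime per year")
```

For 99.999 percent this gives roughly 5.26 minutes, matching the figure quoted above.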

High Availability Failover Clusters

In a high availability cluster, groups of independent servers share resources and data throughout the system. All nodes in a failover cluster have access to shared storage. High availability clusters also include a monitoring connection that servers use to check the “heartbeat” or health of the other servers. At any time, at least one of the nodes in a cluster is active, while at least one is passive.

In a simple two-node configuration, for example, if Node 1 fails, Node 2 uses the heartbeat connection to recognize the failure and then configures itself as the active node. Clustering software installed on every node in the cluster makes sure that clients connect to an active node.
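The two-node case can be sketched in Python with simulated timestamps. This is a toy model of heartbeat-based promotion rather than any vendor's implementation; the class name and the five-second timeout are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class PassiveNode:
    """Passive node that watches heartbeats sent by the active node."""

    timeout_seconds: float      # hypothetical threshold, not a product default
    last_heartbeat: float = 0.0
    active: bool = False

    def receive_heartbeat(self, now: float) -> None:
        """Record the time of the most recent heartbeat from the active node."""
        self.last_heartbeat = now

    def check(self, now: float) -> bool:
        """Promote this node to active if the heartbeat is overdue."""
        if not self.active and now - self.last_heartbeat > self.timeout_seconds:
            self.active = True
        return self.active


node2 = PassiveNode(timeout_seconds=5.0)
for t in (0.0, 1.0, 2.0):        # Node 1 sends heartbeats, then fails
    node2.receive_heartbeat(t)
print(node2.check(now=4.0))      # last heartbeat 2 s ago: Node 2 stays passive
print(node2.check(now=8.0))      # last heartbeat 6 s ago: Node 2 becomes active
```

A real cluster also has to rule out a broken heartbeat link before promoting a node, which is the problem that quorum voting addresses.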

Larger configurations may use dedicated servers to perform cluster management. A cluster management server constantly sends out heartbeat signals to determine if any of the nodes are failing, and if so, to direct another node to assume the load.

Some cluster management software provides HA for virtual machines (VMs) by pooling the machines and the physical servers they reside on into a cluster. If failure occurs, the VMs on the failed host are restarted on alternate hosts.

Shared storage does pose a risk as a potential single point of failure. However, the use of RAID 6 together with RAID 10 can help to ensure that service will continue even if two hard drives fail.

If all servers are plugged into the same power grid, electrical power can represent another single point of failure. The nodes can be safeguarded by equipping each with a separate uninterruptible power supply (UPS).

Continuous Availability Failover Clusters

In contrast to the HA model, a fault-tolerant cluster consists of multiple systems that share a single copy of a computer’s OS. Software commands issued by one system are also executed on the other systems.

CA requires the organization to use formatted computer equipment and a secondary UPS. In a CA failover cluster, the operating system (OS) has an interface where a software programmer can check critical data at predetermined points in a transaction. CA can only be achieved by using a continuously available and nearly exact copy of a physical or virtual machine running the service. This redundancy model is called 2N.

CA systems can compensate for many different sorts of failures. A fault tolerant system can automatically detect a failure of:

- A hard drive

- A central processing unit (CPU)

- An I/O subsystem

- A power supply

- A network component

The failure point can be immediately identified, and a backup component or procedure can take its place instantly without interruption in service.

Clustering software can be used to group together two or more servers to act as a single virtual server, or you can create many other CA failover setups. For example, a cluster might be configured so that if one of the virtual servers fails, the others respond by temporarily removing the virtual server from the cluster. It then automatically redistributes the workload among the remaining servers until the downed server is ready to go online again.

An alternative to CA failover clusters is use of a “double” hardware server in which all physical components are duplicated. These servers perform calculations independently and simultaneously on the separate hardware systems. These “double” hardware systems perform synchronization by using a dedicated node that monitors the results coming from both physical servers. While this provides security, this option can be even more expensive than other options. Stratus, a maker of these specialized fault tolerant hardware servers, promises that system downtime won’t amount to more than 32 seconds each year. However, the cost of one Stratus server with dual CPUs is estimated at approximately $160,000 per synchronized module.

Practical Applications of Failover Clusters

Ongoing Availability of Mission Critical Applications

Fault tolerant systems are a necessity for computers used in online transaction processing (OLTP) systems. OLTP, which demands 100 percent availability, is used in airline reservations systems, electronic stock trading, and ATM banking, for example.

Many other types of organizations also use either CA clusters or fault tolerant computers for mission critical applications, such as businesses in the fields of manufacturing, logistics, and retail. Applications include e-commerce, order management, and employee time clock systems.

For clustering applications and services requiring only “five nines” uptime, high availability clusters are generally regarded as adequate.

Disaster Recovery

Disaster recovery is another practical application for failover clusters. Of course, it’s highly advisable for failover servers to be housed at remote sites in the event that a disaster such as a fire or flood takes out all physical hardware and software in the primary data center.

In Windows Server 2016 and 2019, for example, Microsoft provides Storage Replica, a technology that replicates volumes between servers for disaster recovery. The technology includes a stretch failover feature for failover clusters spanning two geographic sites.

By stretching failover clusters, organizations can replicate among multiple data centers. If a disaster strikes at one location, all data continues to exist on failover servers at other sites.

Database Replication

Microsoft has offered failover clustering under the name Windows Server Failover Clustering (WSFC) since Windows Server 2008, and positions it as protection for “mission-critical” applications such as its SQL Server database and Microsoft Exchange communications server.

Other database providers offer failover cluster technology for database replication. MySQL Cluster, for example, includes a heartbeat mechanism for failure detection and fails over to other nodes in the cluster, typically within one second, with no service interruption to clients. A geographic replication feature enables databases to be mirrored to remote locations.

Failover Cluster Types

VMware Failover Clusters

Among the virtualization products available, VMware offers several virtualization tools for VM clusters. vSphere vMotion provides a CA architecture that exactly replicates a VMware virtual machine and its network between physical data center networks.

A second product, VMware vSphere HA, provides HA for VMs by pooling them and their hosts into a cluster for automatic failover. The tool also does not rely on external components like DNS, which reduces potential points of failure.

Windows Server Failover Cluster (WSFC)

You can create Hyper-V failover servers with the use of WSFC, a feature in Windows Server 2016 and 2019 that monitors clustered physical servers, providing failover if needed. WSFC also monitors clustered roles, formerly referred to as clustered applications and services. If a clustered role isn’t working correctly, it is either restarted or moved to another node.

WSFC includes Microsoft’s previous Cluster Shared Volume (CSV) technology to provide a consistent, distributed namespace for accessing shared storage from all nodes. In addition, WSFC supports CA file share storage for SQL Server and Microsoft Hyper-V cluster VMs. It also supports HA roles running on physical servers and Hyper-V cluster VMs.

SQL Server Failover Clusters

In SQL Server 2012, Microsoft introduced Always On, an HA solution that uses WSFC as a platform technology, registering SQL Server components as WSFC cluster resources. According to Microsoft, related resources are combined into a role which is dependent on other WSFC resources. WSFC can then identify and communicate the need to either restart a SQL Server instance or automatically fail it over to a different node.

Red Hat Linux Failover Clusters

OS makers other than Microsoft also provide their own failover cluster technologies. For example, Red Hat Enterprise Linux (RHEL) users can create HA failover clusters with the High Availability Add-On and Red Hat Global File System (GFS/GFS2). Support is provided for single-cluster stretch clusters spanning multiple sites as well as multi-site or “disaster-tolerant” clusters. The multi-site clusters generally use storage area network (SAN)-enabled data storage replication.

Implementing Failover Cluster in Windows Server 2019

Today’s “always-on” and “always available” IT environments require a highly available infrastructure design. Simply put, you don’t want to have a single point of failure in your business-critical infrastructure. If you do, it quickly becomes apparent that you are only as good as your weakest link. Redundancy needs to exist throughout the environment, including your physical infrastructure and applications.

When it comes to your hosts that run applications and services, you want to ensure you have multiple hosts that can provide redundancy when one of your hosts fails. This is true when you have hosts running applications like MS SQL Server or if you have hosts that are serving as virtual hosts for your virtualized environments.

In the world of Microsoft Windows, Windows Server Failover Cluster is the mechanism used for hosting applications and hypervisors in a highly available way so as to provide minimum disruptions when there is a failure in the environment.

Windows Server 2019 offers the most advanced failover clustering capabilities of any Windows Server release to date. In this article, let’s take a look at Windows Failover Clustering in general: what it is, what a Hyper-V failover cluster is, how they work, what is new in Windows Server 2019 Failover Cluster and its use cases, and how to implement a Windows Failover Cluster in Windows Server 2019.

What is Windows Failover Clustering?

To increase the availability of your business-critical data on Windows Server, you need the Failover Clustering feature.

What is a failover cluster?

The failover cluster in Windows Server is a group of independent nodes that work together as a single logical unit to increase the availability and scalability of the roles and services that run on top of the failover cluster; this can be a well-known application like MS SQL Server or virtualization specific roles like Hyper-V.

A failover cluster is generally connected together via physical means as well as via software or at the application layer. The general advantage of running these applications on top of a failover cluster is if one or more cluster nodes fail, other nodes are there to pick up the application, service, or role of the failed node. This process is referred to as failover and hence it is named as “failover cluster”.

Hyper-V Failover Cluster

Put simply, a Hyper-V failover cluster is a group of similar Hyper-V servers (Windows Servers with the Hyper-V role enabled) that are connected and work together so that when one server running an application, service, or role goes down or fails, the others take over its workload. The Hyper-V servers are referred to as nodes.

Working Mechanism of Failover Cluster

Failover clusters have an internal monitoring mechanism that constantly queries the nodes that are members of the failover clusters to ensure they are healthy, alive, and are functioning properly; this is referred to as “heartbeat”. Sometimes if a node becomes unhealthy, the clustered service or application that exists on the unhealthy node may be restarted to resolve the issue or completely fail over as mentioned above.

Failover Clusters provide another extremely powerful storage mechanism called Cluster Shared Volume (CSV). CSV allows providing shared storage between cluster nodes so that a consistent, distributed namespace is provided to access shared storage from all nodes. This provides the necessary multi-connected storage functionality for Hyper-V machines also.

Quorum in a failover clustering configuration is a special voting mechanism that helps to prevent a situation called split-brain.

What is split-brain? – A split-brain situation can develop when cluster nodes fail or lose contact with each other. As mentioned above, there is a special type of communication that is maintained between the nodes of a Windows Server Failover Cluster. If anything separates the nodes in the failover cluster from one another, each group of nodes may assume it needs to take ownership of cluster resources.

What is new in Windows Server 2019 Failover Cluster?

With each new release of Windows Server, Microsoft generally adds features and functionalities to the Failover Cluster feature. Windows Server 2019 is no exception; there have been some really great feature improvements with Windows Server 2019 that make the Failover Cluster feature even more powerful than previous releases.

Let’s check that out:

- Cluster sets – One of the great new features of the Windows Server 2019 software-defined data center solution is the ability to form cluster sets.

- Azure-aware clusters – Microsoft is making it increasingly easy to run workloads inside the Azure IaaS environment. With Azure-aware clusters, Windows Failover Clusters can now detect when they are running inside Azure. When they are running in the Azure IaaS environment, they automatically optimize themselves to provide proactive failover and logging of Azure planned maintenance events, etc. Additionally, you no longer have to configure the load balancer with a dynamic network name.

- Cross-domain cluster migration – Windows Failover Clusters that are running on top of Windows Server 2019 can now be moved between different Active Directory domains. This is a long-requested feature for Windows Failover Clusters that opens many possibilities and eases the pains of domain consolidations, mergers, etc.

- USB witness – With the USB witness configuration, a two-node Windows Failover Cluster can use a simple USB device attached to a commodity network device, such as a router, to provide the witness component for a quorum. This is called true two-node.

- Cluster infrastructure improvements – With Windows Server 2019, the CSV cache is enabled by default to boost the performance of virtual machines running on Cluster Shared Volumes. Additionally, there have been enhancements to allow the Windows Failover Cluster to have more ability and logic to detect problems with the cluster and to automatically repair it. This includes partitioned nodes and the use of network route detection also.

- Cluster Aware Updating supports Storage Spaces Direct – A great improvement to Windows Failover Clusters in Windows Server 2012 was the Cluster Aware Updating feature. This automates the process of applying software updates on clustered servers while maintaining the availability of the roles that are housed on the cluster. This feature has been improved with each release of Windows Server. Now with Windows Server 2019, the CAU feature recognizes and is integrated with Storage Spaces Direct (S2D). The feature orchestrates restarts of all servers in the cluster for maintenance operations including updates.

- File share witness enhancements – New enhancements, improvements, and failsafe with the file share witness have been implemented with Windows Server 2019. This includes using the file share witness in poor internet-connected remote locations, lack of shared drives, lack of a domain controller such as in a DMZ, workgroup or cross-domain clusters, as well as blocking DFS file shares.

- Cluster hardening – Communication within the cluster over SMB for CSV volumes and S2D leverages certificates to make the communication as secure as possible and eliminates the dependencies on New Technology LAN Manager (NTLM).

- Failover cluster no longer uses NTLM authentication – NTLM has gone by the wayside with Windows Server 2019 Failover Cluster authentication. With Windows Server 2019, failover clusters use Kerberos and certificate-based authentication exclusively.

What is a cluster set? – A loosely-coupled grouping of multiple failover clusters including compute, storage, and hyper-converged infrastructure that enables you to move virtual machines between clusters and different cluster sets.

With this newest version of Failover Clustering available in Windows Server 2019, there are many new enhancements to make note of. Perhaps one of the most common roles that are hosted on a Windows Server Failover Cluster is Hyper-V. This allows running virtual machines in a highly-available way; specific to Hyper-V.

Prerequisites for Installing the Windows Failover Clustering

Before installing the Windows feature component, you need to verify the prerequisites. What are those? The following are generally noted by Microsoft as the prerequisites of installing the Windows Failover Clustering feature and those specific to Hyper-V:

- Install the same version of Windows Server for all Failover Cluster nodes

- Have servers with the same or similar hardware configurations

- Make sure storage and network components are adequate for connections, etc

- Shared storage – Failover clustering requires shared storage, either traditional shared storage or software-defined storage. Traditional shared storage is typically provided by SAN devices, for example via iSCSI or Fibre Channel, while Storage Spaces Direct (S2D) is the newer software-defined approach.

- Attached storage should contain multiple physical disks that are configured in a way that provides redundancy. Some configurations may utilize a disk or logical storage as a disk witness

- Basic, not dynamic disks are supported

- With Cluster Shared Volumes, use NTFS; with S2D, it is recommended to use ReFS

- Especially for software-defined Windows Failover Cluster solutions (i.e. Storage Spaces Direct, etc), use WSSD certified hardware solutions

- https://www.microsoft.com/en-us/cloud-platform/software-defined-datacenter

- If you are running specialized Windows Failover Clusters such as Storage Spaces Direct (S2D), you have to pay close attention to the hardware requirements as S2D has very specific requirements

- For Hyper-V specific clusters, the cluster servers must support the hardware requirements for the Hyper-V role which includes having processors with hardware-assisted virtualization. This includes Intel Virtualization Technology (Intel VT) or AMD Virtualization (AMD-V) technology. Additionally, Hardware-enforced DEP must also be enabled.

How to Implement Failover Clustering in Windows Server?

The following walkthrough implements failover clustering in Windows Server 2019. It involves these steps:

- Installing the same version of Windows Server and patches on at least two failover cluster nodes.

- Deciding on domain-joined, multi-domain, or workgroup clusters.

- Configuring shared storage between the failover cluster nodes.

- Installing the Failover Cluster feature and Role services you want to cluster (Hyper-V, etc…).

- Testing the cluster configuration.

- Creating the Failover Cluster.

- Configuring quorum.

The following sections briefly explain each step.

Install Windows Server 2019 and Patches

Let’s skip past the point of installing Windows Server as we have already installed Windows Server 2019 on two failover cluster hosts. One important point to note is that you want to make sure your failover cluster hosts are running the same version of Windows Server and also are at the same patch level. This ensures that everything between the hosts operates consistently and there is no unexpected behavior or variance between your nodes.

Ensure your failover cluster hosts are running the same Windows version and patch level.
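A quick way to compare the hosts is to query each one remotely. The sketch below is one possible check, not an official validation step. It assumes PowerShell remoting is enabled on both hosts, and the node names HV01 and HV02 are hypothetical placeholders.

```powershell
# Hypothetical node names; replace them with your own hosts.
$nodes = 'HV01', 'HV02'

# OS caption, version, and build number reported by each node.
Invoke-Command -ComputerName $nodes -ScriptBlock {
    Get-CimInstance -ClassName Win32_OperatingSystem |
        Select-Object Caption, Version, BuildNumber
} | Sort-Object PSComputerName |
    Format-Table PSComputerName, Caption, Version, BuildNumber

# Hotfix IDs that are present on one node but missing on the other.
$first  = Get-HotFix -ComputerName $nodes[0]
$second = Get-HotFix -ComputerName $nodes[1]
Compare-Object -ReferenceObject $first.HotFixID -DifferenceObject $second.HotFixID
```

If Compare-Object returns nothing, both hosts report the same set of hotfix IDs.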

Active Directory Domain Join?

With Windows Server 2016, Microsoft opened up some new and very exciting capabilities with Windows Server Failover Clusters in the realm of providing domain join flexibility.

Starting with Windows Server 2016 and extended to 2019, you can have clusters that are domain-joined, cross-domain joined (multi-domain), or workgroup clusters. For the lab walkthrough, we are using the typical domain-joined cluster configuration. Just note the other options that are now available.

Both cluster hosts are joined to the domain.

Shared Storage

In this lab, two shared drives are mounted via iSCSI connections to SAN storage: a small volume for quorum purposes and a larger volume that stores the data for Hyper-V virtual machines.

You will want to ensure all cluster hosts have connectivity to the shared volumes so that cluster failover, quorum, and other processes function as needed.

When the cluster is created, the cluster wizard is generally effective at choosing the disk to use for quorum purposes (the smallest disk, for example). However, you can also assign the quorum disk manually in the Configure Cluster Quorum Wizard in Failover Cluster Manager.

Right-click on the Failover Cluster name in the Failover Cluster Manager > More Actions > Configure Cluster Quorum Settings.

Manually assigning quorum

Mounting two volumes for failover cluster shared storage.
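Once the cluster exists, the same quorum change can be made from PowerShell instead of the wizard. This is a minimal sketch: 'Cluster Disk 1' is a hypothetical resource name, so list the real disk resources first.

```powershell
# Show the current quorum configuration of the local cluster.
Get-ClusterQuorum

# List cluster resources to find the real name of the quorum disk.
Get-ClusterResource

# Assign a specific disk as the witness.
# 'Cluster Disk 1' is a placeholder; use a name returned by Get-ClusterResource.
Set-ClusterQuorum -DiskWitness 'Cluster Disk 1'
```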

Install Hyper-V and Other Roles to Cluster

Since these two nodes will serve as Hyper-V hosts, we will install the Hyper-V role on each host so that both are ready to join the failover cluster and host virtual machines. You can use Server Manager to install roles and features, but PowerShell is a quick and easy way to install Windows Server components. To install Hyper-V, use the following one-liner.

- Install-WindowsFeature -Name Hyper-V -IncludeAllSubFeature -IncludeManagementTools -Restart

Installing the Hyper-V role on the failover cluster hosts

Install Failover Clustering Feature

Next, install the Failover Clustering feature together with its management tools (Failover Cluster Manager). Again, PowerShell is a convenient way to do this. Use the following one-liner:

- Install-WindowsFeature -Name Failover-Clustering -IncludeManagementTools

Install the Failover Clustering feature.

Testing the Failover Cluster Configuration

One of the tools provided by Microsoft that is helpful when configuring a Windows Server Failover Cluster is the Validate Configuration tool that can be found in the Failover Cluster Manager. This helps to shed light on any issues with the configuration before creating the cluster.

The validation runs extensive tests on the most common problem areas of cluster configurations, including the network and storage configuration. It checks that the configured shared storage meets the requirements of Windows Server failover clustering, including support for SCSI-3 persistent reservations.

Using the Validate Cluster tool to validate the failover cluster configuration.

You can also use PowerShell to run the validation against the prospective failover cluster hosts:

- Test-Cluster -Node <node1>,<node2>

Running the Test-Cluster cmdlet against your cluster hosts before creating the failover cluster.

The validation process creates a report in the C:\Windows\Cluster\Reports directory on the cluster host it was run from.

Viewing the Validation report created

The validation process creates a very detailed report divided up into the major sections of validation. This includes the Hyper-V configuration, inventory, network, storage, system configuration, etc. You will want to make note of any errors or warnings in the report to make sure these are corrected before proceeding with the failover cluster configuration.

Viewing the failover cluster validation report
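When only part of the configuration has changed, the validation can be limited to specific test categories. The sketch below assumes hypothetical node names HV01 and HV02 and relies on Test-Cluster returning a file object for the generated report.

```powershell
# Hypothetical node names; replace them with your prospective cluster hosts.
$nodes = 'HV01', 'HV02'

# Run only the storage and network test categories.
$report = Test-Cluster -Node $nodes -Include 'Storage', 'Network'

# Test-Cluster returns the report file; print where it was written.
$report.FullName
```

Running the full test set (without -Include) is still advisable before creating a production cluster.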

Creating the Failover Cluster

Once you have validated the cluster configuration and resolved any issues found, you are ready to create the failover cluster. This is easily done in PowerShell:

- New-Cluster -Name HyperVCluster -Node <node1>,<node2> -StaticAddress <IP address>

Creating a new Windows Server Failover Cluster

After the cluster is created, you can verify that the Active Directory object was created and that you see the cluster in the Failover Cluster Manager.

Thus, a New Failover Cluster computer object is created in Active Directory.
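The new cluster can also be checked from PowerShell. This is a small sketch that reuses the HyperVCluster name from the New-Cluster command above; run it from a machine that has the Failover Clustering management tools installed.

```powershell
# Basic cluster object.
Get-Cluster -Name HyperVCluster

# Member nodes and their state (Up, Down, Paused).
Get-ClusterNode -Cluster HyperVCluster

# Core resources such as the cluster name, IP address, and disks.
Get-ClusterResource -Cluster HyperVCluster

# Quorum configuration chosen during cluster creation.
Get-ClusterQuorum -Cluster HyperVCluster
```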

Concluding Thoughts

Windows Server Failover Clusters provide a robust and resilient platform for running business-critical applications and services. Failover clustering has been enhanced with each release of the Windows Server platform, and Windows Server 2019 offers the most feature-rich failover clustering platform to date.

No matter how resilient your platform from a high-availability perspective, you must ensure your data is protected. This means you should have effective backups of your mission-critical data running on your Windows Server Failover Clusters, including Hyper-V virtual machines.

One such backup solution on the market is the Vembu BDR Suite, which provides data availability for Hyper-V environments ranging from standalone Hyper-V hosts to multiple Windows Server Failover Clusters hosting the Hyper-V role.

In Vembu BDR Suite, even when your virtual machines are moved to another Hyper-V host, the backups will continue to function without interruptions. Used in conjunction with the native high availability features within Hyper-V, the Vembu Backup for Microsoft Hyper-V ensures data availability for your Hyper-V production workloads even at times of disasters.

Vembu BDR Suite provides a very robust backup solution with the enterprise-class features for protecting your Hyper-V clusters effectively at a surprising price range. Download a free, fully-featured trial of Vembu BDR Suite.

Author: Roman Levchenko (www.rlevchenko.com), MVP – Cloud and Datacenter Management

Hello everyone! Windows Server 2016 recently reached general availability, which means you can start using the new version of the product in your infrastructure right now. The list of new features is fairly long, and we have already covered some of them in earlier posts, but this article looks at the high availability services, which in my opinion are the most interesting and most widely used (especially in virtualization environments).

Cluster OS Rolling upgrade

In previous versions of Windows Server, migrating a cluster caused significant downtime: the source cluster became unavailable while a new cluster was built on nodes running the updated OS, and the roles then had to be migrated between the clusters. That process demands highly qualified staff and carries certain risks and unpredictable labor costs. This matters most to CSPs and other customers whose SLAs limit how long services may be unavailable. There is no need to spell out what a serious SLA violation means for a resource provider.

Windows Server 2016 fixes this by allowing Windows Server 2012 R2 and Windows Server 2016 nodes to coexist in one cluster while it is being upgraded (Cluster OS Rolling Upgrade, CRU below).

As the name suggests, migrating the cluster mostly comes down to reinstalling the OS on the servers one at a time; more on that shortly.

First, here are the benefits that CRU provides:

- No downtime at all when upgrading WS2012R2 Hyper-V/SOFS clusters. Other cluster roles (for example, SQL Server) may be unavailable briefly (less than 5 minutes) while a one-time failover completes.

- No additional hardware is required. A cluster is normally sized to tolerate the loss of one or more nodes. With CRU, node unavailability is planned and staged. So if the cluster can painlessly survive the temporary absence of at least one node, no extra nodes are needed to achieve zero downtime. If you plan to upgrade several nodes at once (this is supported), plan the load distribution across the remaining nodes in advance.

- No new cluster has to be created. CRU uses the existing CNO (cluster name object).

- The process is reversible (until the cluster functional level is raised).

- In-place upgrade is supported. Note, however, that the recommended way to upgrade cluster nodes is a full installation of WS2016 without preserving data (clean OS install). With an in-place upgrade, you must verify full functionality after upgrading each node (event logs and so on).

- CRU is fully supported by VMM 2016 and can additionally be automated through PowerShell/WMI.

The CRU process, using a two-node Hyper-V cluster as an example:

- Backing up the cluster (database) and the running resources beforehand is recommended. The cluster must be healthy and its nodes available. If necessary, fix existing problems before the migration and pause backup jobs before starting.

- Update the Windows Server 2012 R2 cluster nodes using Cluster-Aware Updating (CAU) or manually through WU/WSUS.

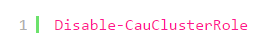

- If CAU is configured, disable it temporarily so that it cannot affect role placement or node state during the transition.

- The CPUs on the nodes must support SLAT in order to run virtual machines under WS2016. This requirement is mandatory.

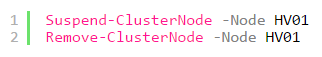

- On one of the nodes, drain the roles and evict the node from the cluster.

- After the node is evicted, perform the recommended full installation of WS2016 (clean OS install, Custom: Install Windows only (advanced)).

- After the reinstall, restore the network settings*, update the node, and install the required roles and features. In my case, that means the Hyper-V role and, of course, Failover Clustering.

New-NetLbfoTeam -Name HV -TeamMembers tNIC1,tNIC2 -TeamingMode SwitchIndependent -LoadBalancingAlgorithm Dynamic

Add-WindowsFeature Hyper-V, Failover-Clustering -IncludeManagementTools -Restart

New-VMSwitch -InterfaceAlias HV -Name VM -MinimumBandwidthMode Weight -AllowManagementOS $false

* Switch Embedded Teaming can be used only after the transition to WS2016 is fully complete.

- Join the node to the appropriate domain.

Add-Computer -ComputerName HV01 -DomainName domain.com -DomainCredential domain\rlevchenko

- Return the node to the cluster. The cluster starts operating in mixed mode, with WS2012R2 functionality and without the new WS2016 features. Completing the upgrade of the remaining nodes within 4 weeks is recommended.

- Move the cluster roles back to node HV01 to redistribute the load.

- Repeat steps 4-9 for the remaining node (HV02).

- After all nodes are upgraded to WS2016, raise the cluster functional level (Mixed Mode – 8.0, Full – 9.0) to complete the migration.

PS C:\Windows\system32> Update-ClusterFunctionalLevel

Updating the functional level for cluster hvcl.

Warning: You cannot undo this operation. Do you want to continue?

[Y] Yes [A] Yes to All [N] No [L] No to All [S] Suspend [?] Help (default is Y): a

Name

----

Hvcl

- (Optional, and with caution) Upgrade the VM configuration version to enable the new Hyper-V features. The VMs must be shut down, and a backup beforehand is advisable. The VM version in 2012 R2 is 5.0; in 2016 RTM it is 8.0. The following command upgrades all VMs in the cluster:

Get-ClusterGroup | ? {$_.GroupType -eq "VirtualMachine"} | Get-VM | Update-VMVersion

List of VM versions supported by 2016 RTM:
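The drain, evict, and re-add steps of the rolling upgrade have PowerShell equivalents, and the supported VM versions can be listed on an upgraded host. The following is a rough sketch rather than a complete procedure. It reuses the HV01 and hvcl names from this walkthrough, the VM name Clu-VM01 is only a placeholder, and it assumes the FailoverClusters and Hyper-V modules are installed.

```powershell
# 1. Drain the roles from the node and wait for the drain to finish.
Suspend-ClusterNode -Name HV01 -Drain -Wait

# 2. Evict the node from the cluster before reinstalling the OS.
Remove-ClusterNode -Name HV01

# ... clean install of WS2016, network settings, roles, domain join ...

# 3. Add the rebuilt node back. Run this from a node that already runs WS2016.
Add-ClusterNode -Name HV01 -Cluster hvcl

# 4. Move a VM role back to the rebuilt node (placeholder VM name).
Move-ClusterVirtualMachineRole -Name 'Clu-VM01' -Node HV01 -MigrationType Live

# 5. Check the functional level: 8 means mixed mode, 9 means WS2016.
Get-Cluster -Name hvcl | Select-Object Name, ClusterFunctionalLevel

# 6. List the VM configuration versions this host supports.
Get-VMHostSupportedVersion
```

Update-ClusterFunctionalLevel should be run only after step 5 shows that every node has been upgraded, because raising the level cannot be undone.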

Cloud Witness

Any cluster configuration has to account for where the witness is placed, since it provides an additional vote and overall quorum. In 2012 R2, a witness can be built on a shared external file share or a disk that is accessible to every cluster node. As a reminder, configuring a witness is recommended for any number of nodes starting with 2012 R2 (dynamic quorum).

Windows Server 2016 adds a new quorum configuration model based on Cloud Witness, which makes it possible to build DR on Windows Server and supports other scenarios.

Cloud Witness uses Microsoft Azure resources (Azure Blob Storage over HTTPS; the required ports must be open on the nodes) to read and write service information that changes when the status of cluster nodes changes. The blob file is named after the cluster's unique identifier, so one storage account can serve several clusters at once (one blob file per cluster inside the automatically created msft-cloud-witness container). The witness needs very little cloud storage, so it is inexpensive to maintain. Hosting the witness in Azure also removes the need for a third site when configuring a stretched cluster and its disaster recovery solution.

Cloud Witness can be used in the following scenarios:

- DR for a cluster stretched across different sites (multi-site).

- Clusters without shared storage (Exchange DAG, SQL Always-On, and others).

- Guest clusters running either in Azure or on-premises.

- Storage clusters with or without shared storage (SOFS).

- Clusters in a workgroup or across different domains (new functionality in WS2016).

Creating and adding a Cloud Witness is fairly simple:

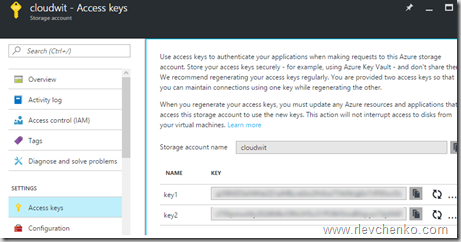

- Создайте новый Azure Storage Account (Locally-redundant storage) и в свойствах аккаунта скопируйте один из ключей доступа.

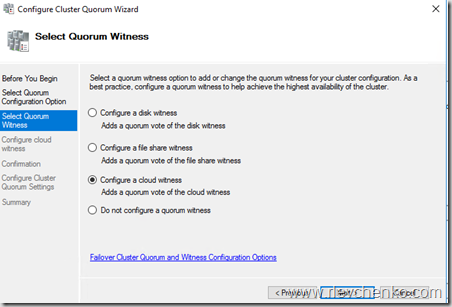

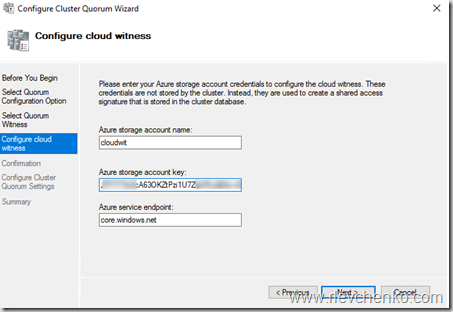

- Запустите мастер настройки кворумной конфигурации и выберите Select the Quorum Witness – Configure a Cloud Witness.

- Введите имя созданного storage account и вставьте ключ доступа.

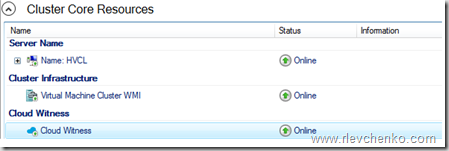

- После успешного завершения мастера конфигурации, Witness появится в Core Resources.

- Blob-файл в контейнере:

Для упрощения можно использовать PowerShell:
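A minimal sketch of the PowerShell route uses Set-ClusterQuorum with its Cloud Witness parameters. The account name and key below are placeholders that you must replace with the values from your own storage account.

```powershell
# Placeholders: substitute your storage account name and one of its access keys.
$accountName = '<storage-account-name>'
$accessKey   = '<storage-account-access-key>'

# Configure the Cloud Witness for the local cluster.
Set-ClusterQuorum -CloudWitness -AccountName $accountName -AccessKey $accessKey

# Confirm that the quorum resource is now the Cloud Witness.
Get-ClusterQuorum
```

Avoid storing the access key in scripts that are checked into source control.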

Workgroup and Multi-Domain Clusters

Windows Server 2012 R2 and earlier versions impose a global requirement before a cluster can be created: the nodes must be members of the same domain. The Active Directory-detached cluster introduced in 2012 R2 has the same requirement and does not relax it in any significant way.

In Windows Server 2016 you can create a cluster that is not tied to AD, either within a workgroup or between nodes that are members of different domains. The process resembles creating a detached cluster in 2012 R2, with a few specifics:

- Supported only in a WS2016 environment.

- The Failover Clustering feature is required.

Install-WindowsFeature Failover-Clustering -IncludeManagementTools

- On each node, create a user that is a member of the Administrators group, or use the built-in account. The user name and password must be identical on all nodes.

net localgroup administrators cluadm /add

If the error “Requested Registry access is not allowed” appears, change the value of the LocalAccountTokenFilterPolicy policy.

New-ItemProperty -Path HKLM:\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System -Name LocalAccountTokenFilterPolicy -Value 1

- A primary DNS suffix must be defined on the nodes.

- The cluster can be created through either PowerShell or the GUI.

New-Cluster -Name WGCL -Node rtm-1,rtm-2 -AdministrativeAccessPoint DNS -StaticAddress 10.0.0.100

- Only a Disk Witness or the Cloud Witness described earlier can be used as the witness. File Share Witness, unfortunately, is not supported.

Supported usage scenarios:

| Role | Support status | Comment |
|---|---|---|
| SQL Server | Supported | Using built-in SQL Server authentication is recommended |
| File Server | Supported, but not recommended | No Kerberos authentication, which is the primary authentication method for SMB |
| Hyper-V | Supported, but not recommended | Only Quick Migration is available; Live Migration is not supported |
| Message Queuing (MSMQ) | Not supported | MSMQ requires AD DS |

Virtual Machine Load Balancing / Node Fairness

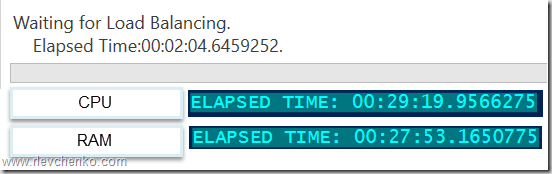

Dynamic Optimization, available in VMM, has partly made its way into Windows Server 2016 and provides basic automatic load distribution across nodes. Resources are moved with Live Migration, and every 30 minutes the cluster uses the following heuristics to decide whether to rebalance:

- Current percentage of memory in use on the node.

- Average CPU load over a 5-minute interval.

The maximum allowed load is determined by the value of AutoBalancerLevel:

get-cluster| fl *autobalancer*

AutoBalancerMode : 2

AutoBalancerLevel : 1

| AutoBalancerLevel | Balancing aggressiveness | Comment |
|---|---|---|
| 1 (default) | Low | Rebalance when a node's load exceeds 80% on either heuristic |
| 2 | Medium | When the load exceeds 70% |
| 3 | High | When the load exceeds 60% |

The balancer parameters can also be set in the GUI (cluadmin.msc). By default, the Low aggressiveness level and the always-balance mode are used.
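To make the threshold table concrete, here is a small sketch of the check it describes. It only illustrates the documented thresholds: the function names are hypothetical, and the cluster's real decision logic is internal and not reproduced here.

```powershell
# Maps AutoBalancerLevel to the load threshold (percent) from the table above.
function Get-BalancerThreshold {
    param([ValidateSet(1, 2, 3)][int]$AutoBalancerLevel)
    switch ($AutoBalancerLevel) {
        1 { 80 }   # Low
        2 { 70 }   # Medium
        3 { 60 }   # High
    }
}

# Returns $true when either heuristic (memory or CPU) exceeds the threshold.
function Test-NodeOverloaded {
    param(
        [int]$AutoBalancerLevel,
        [double]$MemoryPercent,
        [double]$CpuPercent
    )
    $threshold = Get-BalancerThreshold -AutoBalancerLevel $AutoBalancerLevel
    ($MemoryPercent -gt $threshold) -or ($CpuPercent -gt $threshold)
}

# Medium level, 88% CPU load: the node counts as overloaded.
Test-NodeOverloaded -AutoBalancerLevel 2 -MemoryPercent 40 -CpuPercent 88
```

With the default level 1, the same node at 77% memory and low CPU load would not be considered overloaded, because 77% is below the 80% threshold.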

For testing, I use the following parameters:

AutoBalancerLevel: 2

(Get-Cluster).AutoBalancerLevel = 2

AutoBalancerMode: 2

(Get-Cluster).AutoBalancerMode = 2

We simulate load first on CPU (about 88%) and then on RAM (77%). Because the medium aggressiveness level is set and our load values exceed the corresponding threshold (70%), the virtual machines on the loaded node should move to the free node. The script waits for the live migration and prints the elapsed time (from the moment the load starts on the node until the VM is migrated).

Under heavy CPU load the balancer moved more than one VM; under RAM load, one VM was moved within the stated 30-minute interval, during which node load is checked and VMs are moved to other nodes to bring resource usage down to <=70%.

When VMM is used, the built-in node balancing is automatically disabled and replaced by the more recommended mechanism based on Dynamic Optimization, which offers finer control over the optimization mode and interval.

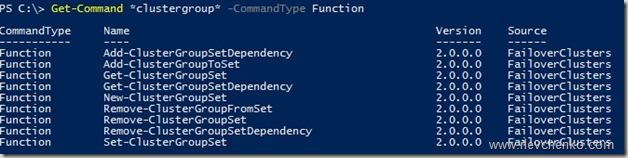

Virtual machine start ordering

In 2012 R2, the VM start logic within a cluster is built on priorities (low, medium, high), whose purpose is to bring the more important VMs online before the remaining, dependent VMs start. This is typically needed for multi-tier services built, for example, on Active Directory, SQL Server, and IIS.

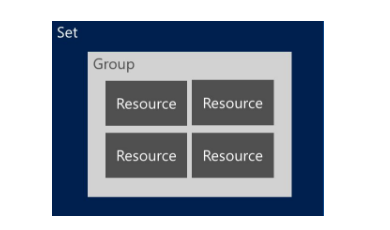

To improve functionality and efficiency, Windows Server 2016 adds the ability to define dependencies between VMs or groups of VMs so that they start in the correct order, using sets (collections of cluster groups). Sets are aimed primarily at VMs but can also be used for other cluster roles.

Consider the following scenario as an example:

The VM Clu-VM02 hosts an application that depends on the availability of Active Directory, which runs on the virtual machine Clu-VM01. The VM Clu-VM03, in turn, depends on the availability of the application on Clu-VM02.

Create new sets using PowerShell:

The VM with Active Directory:

PS C:\> New-ClusterGroupSet -Name AD -Group Clu-VM01

Name: AD

GroupNames: {Clu-VM01}

ProviderNames: {}

StartupDelayTrigger: Delay

StartupCount: 4294967295

IsGlobal: False

StartupDelay: 20

The application:

New-ClusterGroupSet -Name Application -Group Clu-VM02

The service that depends on the application:

New-ClusterGroupSet -Name SubApp -Group Clu-VM03

Add the dependencies between the sets:

Add-ClusterGroupSetDependency -Name Application -Provider AD

Add-ClusterGroupSetDependency -Name SubApp -Provider Application

If necessary, the parameters of a set can be changed with Set-ClusterGroupSet. Example:

Set-ClusterGroupSet -Name Application -StartupDelayTrigger Delay -StartupDelay 30

StartupDelayTrigger defines the action to take after the group starts:

- Delay – wait 20 seconds (the default). Used together with StartupDelay.

- Online – wait until the group in the set reaches the Online state.

StartupDelay – the delay in seconds. The default is 20 seconds.

IsGlobal – specifies whether the set must start before other sets of cluster groups (for example, a set containing the Active Directory VM groups should be globally available and therefore start before other collections).

Let's try to start the VM Clu-VM03:

The cluster first waits for Active Directory on Clu-VM01 to become available (StartupDelayTrigger – Delay, StartupDelay – 20 seconds).

After Active Directory starts, the dependent application on Clu-VM02 starts (StartupDelay applies at this stage too).

The last step is starting the VM Clu-VM03 itself.
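Before relying on the start order, it can help to review what was configured. The sketch below only reads the configuration and uses the set names from this example (AD, Application, SubApp).

```powershell
# List all sets with their member groups, providers, and startup settings.
Get-ClusterGroupSet |
    Format-Table Name, GroupNames, ProviderNames, StartupDelayTrigger, StartupDelay, IsGlobal

# Show what the SubApp set depends on (expected provider: Application).
Get-ClusterGroupSetDependency -Name SubApp
```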

VM Compute/Storage Resiliency

Windows Server 2016 introduces new operating modes for nodes and VMs that make them more resilient when communication between cluster nodes is troubled, and that prevent resources from becoming completely unavailable by reacting to small problems before they grow into larger ones (a proactive approach).

Isolated mode

Suppose the cluster service on node HV01 suddenly becomes unavailable, that is, the node has intra-cluster communication problems. In this scenario the node is placed in the Isolated state (ResiliencyLevel) and is temporarily removed from the cluster.

Virtual machines on the isolated node keep running* and move to the Unmonitored status (that is, the cluster service no longer looks after these VMs).

*For VMs stored on SMB: the status stays Online and they run normally (SMB does not require a cluster identity for access). With block storage, the VMs go into the Paused Critical status because Cluster Shared Volumes are unavailable to the isolated node.

If the node does not bring the cluster service back (in our case) within ResiliencyDefaultPeriod (240 seconds by default), the cluster moves the node to the Down status.

Quarantined mode

Suppose node HV01 successfully restored the cluster service and left Isolated mode, but the situation repeated 3 or more times within an hour (QuarantineThreshold). In this scenario WSFC places the node in quarantine (Quarantined) for the default 2 hours (QuarantineDuration) and moves the node's VMs to a known healthy node.

If you are confident that the source of the problem has been eliminated, you can bring the node back into the cluster manually.
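One way to do that is the ClearQuarantine switch of Start-ClusterNode. This is a minimal sketch that uses the HV01 node name from the example; run it only after the underlying fault has really been fixed.

```powershell
# Current resiliency and quarantine settings of the cluster.
Get-Cluster | Format-List ResiliencyDefaultPeriod, QuarantineThreshold, QuarantineDuration

# Clear the quarantine state and start the cluster service on the node.
Start-ClusterNode -Name HV01 -ClearQuarantine

# Verify that the node is back in the Up state.
Get-ClusterNode | Format-Table Name, State
```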

Note that no more than 25% of the cluster nodes can be in quarantine at the same time.

To customize this behavior, use the parameters mentioned above and the Get-Cluster cmdlet:

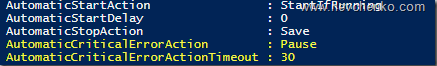

(Get-Cluster).QuarantineDuration = 1800

Storage Resiliency

In previous versions of Windows Server, the handling of failed read/write operations to a virtual disk (a lost connection to storage) was primitive: the VMs were turned off and needed a cold boot on the next start. In Windows Server 2016, when such problems occur the VM moves to the Paused-Critical status (AutomaticCriticalErrorAction) after first freezing its running state (it remains unavailable, but there is no unexpected shutdown).

If the connection is restored within the timeout (AutomaticCriticalErrorActionTimeout, 30 minutes by default), the VM leaves Paused-Critical and becomes available again from the point at which the problem was detected (think pause/play).

If the timeout expires before the storage comes back, the VM is turned off (the Turn Off action).

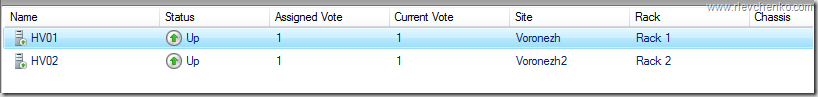
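Both settings are exposed per VM through the Hyper-V cmdlets. The sketch below reads them and then changes the timeout; the VM name Clu-VM01 and the 60-minute value are only examples.

```powershell
# Show the current storage resiliency settings of a VM.
Get-VM -Name 'Clu-VM01' |
    Select-Object Name, AutomaticCriticalErrorAction, AutomaticCriticalErrorActionTimeout

# Pause the VM on a critical storage error and wait up to 60 minutes
# for the storage to return before the VM is turned off.
Set-VM -Name 'Clu-VM01' -AutomaticCriticalErrorAction Pause -AutomaticCriticalErrorActionTimeout 60
```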

Site-Aware/Stretched Clusters и Storage Replica

This topic deserves a post of its own, but here is a brief introduction.

Previously we were pointed to third-party solutions (lots of $) for building full-fledged stretched clusters (providing SAN-to-SAN replication). With Windows Server 2016, cutting the budget several times over and increasing standardization when building such systems becomes a reality.

Storage Replica provides synchronous (!) and asynchronous replication between any storage systems (including Storage Spaces Direct), supports any workload, and forms the basis of multi-site clusters or a full DR solution. SR is available only in the Datacenter edition and can be used in stretch cluster, cluster-to-cluster, and server-to-server scenarios.

Using SR in a stretched cluster is especially attractive because of automatic failover and its tight integration with site awareness, which was also introduced in Windows Server 2016. Site awareness lets you define groups of cluster nodes and bind them to a physical location (site fault domain) to form custom failover policies, Storage Spaces Direct data placement, and VM distribution logic. Binding is possible not only at the site level but also at lower levels (node, rack, chassis).

New-ClusterFaultDomain -Name Voronezh -Type Site -Description "Primary" -Location "Voronezh DC"

New-ClusterFaultDomain -Name Voronezh2 -Type Site -Description "Secondary" -Location "Voronezh DC2"

New-ClusterFaultDomain -Name Rack1 -Type Rack

New-ClusterFaultDomain -Name Rack2 -Type Rack

New-ClusterFaultDomain -Name HPc7000 -Type Chassis

New-ClusterFaultDomain -Name HPc3000 -Type Chassis

Set-ClusterFaultDomain -Name HV01 -Parent Rack1

Set-ClusterFaultDomain -Name HV02 -Parent Rack2

Set-ClusterFaultDomain -Name Rack1,HPc7000 -Parent Voronezh

Set-ClusterFaultDomain -Name Rack2,HPc3000 -Parent Voronezh2

This approach brings the following advantages in a multi-site cluster:

- Failover first happens between nodes within the same fault domain. Only if all nodes in the fault domain are unavailable do roles move to another one.

- Draining roles (migrating roles for maintenance mode and so on) first checks whether the roles can move to a node in the local site and only then moves them to another site.

- CSV balancing (redistributing cluster disks between nodes) also tries to stay within the home fault domain/site.

- VMs try to stay in the same site as the CSVs they depend on. If the CSVs migrate to another site, the VMs begin migrating to the same site after 1 minute.

In addition, site awareness lets you define a preferred ("parent") site for all newly created VMs/roles:

(Get-Cluster).PreferredSite = <site name>

Or configure it more granularly for each cluster group:

(Get-ClusterGroup -Name VMName).PreferredSite = <preferred site name>

Other new features

- Support for Storage Spaces Direct and Storage QoS.

- Online resizing of shared VHDX for guest clusters, plus support for Hyper-V Replica and host-level backup.

- Improved performance and scaling of the CSV cache, with support for tiered spaces, Storage Spaces Direct, and deduplication (giving tens of GB of RAM to the cache is no problem).

- Changes to cluster log generation (time zone information and so on), plus active memory dump (a new alternative to a full memory dump) to simplify troubleshooting.

- A cluster can now use several interfaces within the same subnet. Configuring different subnets on the adapters is no longer needed for the cluster to identify them; they are added automatically.

This concludes our overview of the new WSFC features in Windows Server 2016. I hope you found the material useful. Thank you for reading and for your comments.

Have a great day!