The EMFILE (too many open files) error is fairly common among Windows users. It occurs when an application tries to open more files than the operating system allows. As a result, the program may stop working correctly or even terminate with an error.

The main cause of the EMFILE error is the limit on the number of open files that Windows applies by default. When a program or application opens too many files, this error occurs. This usually happens when a program keeps many files open at once or works with many network connections.

There are several ways to fix the EMFILE error. One is to raise the limit on the number of open files by editing the relevant configuration and setting a higher value. Node.js developers can use the 'ulimit' module. Note that setting the NODE_NO_WARNINGS environment variable only suppresses Node.js warning messages and does not raise the limit.

Contents

- EMFILE (too many open files) on Windows: how to fix it

- The damage caused by the EMFILE error

- Why the EMFILE error occurs on Windows

EMFILE (too many open files) on Windows: how to fix it

The EMFILE error can occur on a Windows computer when the number of simultaneously open files exceeds the allowed limit. This can be caused by various factors, including operating system settings, running processes, and the applications in use.

To fix the EMFILE error on Windows, you can try several approaches:

- Raise the open-file limit. Depending on the program, this may be done in the Windows registry or in the configuration file of the program that raises the EMFILE error. Set a higher value so that more files can be open at the same time.

- Close unneeded files and processes. Working with a large number of files or processes can trigger the EMFILE error. Close files and processes you are not using to free up resources.

- Update the operating system and applications. In some cases, the EMFILE error is caused by outdated versions of the operating system or applications. Install the latest updates and versions of your software.

- Use specialized software. Various programs can help you monitor and manage open files and processes in Windows. They help you use resources efficiently and prevent the EMFILE error.

If you run into the EMFILE error on Windows, the methods above may help you fix it. Keep an eye on the number of simultaneously open files and processes, and regularly check the operating system and applications for updates.

The damage caused by the EMFILE error

When a computer or operating system runs into the EMFILE error, the consequences for the system's operation and stability can be serious.

One of the main consequences of this error is restricted access to files. Once the number of open files reaches the allowed limit, attempts to open new files fail. This causes problems for programs and applications that need many files at once, for example when working with databases or network resources.

The EMFILE error can also reduce system performance. Signs of this may include higher CPU or memory usage, long delays in file read and write operations, and crashes or hangs in applications.

This error can also lead to data loss. If the system cannot open or process the files it needs, valuable data may be lost or critical files corrupted. When the system stores and processes important data, these consequences can be catastrophic and may require restoring data from backups or additional recovery effort.

Overall, the EMFILE error is a serious problem that needs to be fixed promptly. It can harm the system's operation by causing file access problems, degraded performance, and data loss, with serious consequences for the user or organization.

Why the EMFILE error occurs on Windows

This error can have several causes:

- Improper resource release: if an application does not close files after using them, they stay open, which over time can push it past the open-file limit.

- Bugs in the code: faulty application code can leak resources and mismanage files, so open files accumulate until the EMFILE error occurs.

- Incorrect operating system configuration: in some cases, the open-file limit may be set too low in the operating system's configuration files.

To fix the EMFILE error on Windows, you can take the following steps:

- Close files after use: make sure your application closes files once it is done with them, using the corresponding functions or methods of your programming language.

- Review the application code: debug your code and make sure it is correct and free of resource leaks. Use appropriate linters or tools for detecting bugs and leaks.

- Check the operating system configuration: make sure the open-file limit in the operating system's configuration files is high enough. If it is not, edit the relevant files to raise it.
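Here is a minimal C sketch of the first recommendation. It uses a hypothetical helper, count_lines, and the same idea applies in any language. The file is opened, read, and then released on every exit path, including the read-error path, because every return after a successful fopen() goes through a single cleanup label.

```c
#include <stdio.h>

/* Hypothetical helper: returns the number of '\n' characters in the file at
   `path`, or -1 on error. Every path after a successful fopen() reaches the
   single fclose() below, so calling it in a loop never accumulates handles. */
long count_lines(const char *path)
{
    long result = -1;
    FILE *fp = fopen(path, "r");
    if (fp == NULL)
        return -1;              /* nothing was opened, nothing to close */

    long n = 0;
    int c;
    while ((c = fgetc(fp)) != EOF) {
        if (c == '\n')
            ++n;
    }
    if (ferror(fp))
        goto cleanup;           /* read error: still release the handle */

    result = n;

cleanup:
    fclose(fp);                 /* the one place where the handle is released */
    return result;
}
```

Calling count_lines() thousands of times in a loop leaves the number of open handles unchanged. That is the property the recommendation asks for.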

By following these recommendations, you can prevent the EMFILE error and improve how your application runs on Windows.

Troubleshooting

Problem

This technote explains how to debug the "Too many open files" error message on Microsoft Windows, AIX, Linux and Solaris operating systems.

Symptom

The following messages could be displayed when the process has exhausted the file handle limit:

java.io.IOException: Too many open files

[3/14/15 9:26:53:589 EDT] 14142136 prefs W Could not lock User prefs. Unix error code 24.

New sockets/file descriptors cannot be opened after the limit has been reached.

Cause

System configuration limitation.

When the "Too Many Open Files" error message is written to the logs, it indicates that all available file handles for the process have been used (this includes sockets as well). In a majority of cases, this is the result of file handles being leaked by some part of the application. This technote explains how to collect output that identifies what file handles are in use at the time of the error condition.

Resolving The Problem

Determine Ulimits

On UNIX and Linux operating systems, the ulimit for the number of file handles can be configured, and it is usually set too low by default. Increasing this ulimit to 8000 is usually sufficient for normal runtime, but this depends on your applications and your file/socket usage. Additionally, file descriptor leaks can still occur even with a high value.

Display the current soft limit:

ulimit -Sn

Display the current hard limit:

ulimit -Hn

Or capture a Javacore; the limit is listed in that file under the name NOFILE:

kill -3 PID
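On UNIX and Linux, the same two numbers can also be read from inside a process with the POSIX getrlimit() call. It takes RLIMIT_NOFILE, the resource that ulimit -n reports. Below is a minimal sketch. print_nofile_limits is a hypothetical helper and is not part of any IBM tooling.

```c
#define _XOPEN_SOURCE 700
#include <stdio.h>
#include <sys/resource.h>

/* Prints a limit value, spelling out RLIM_INFINITY as "unlimited". */
static void print_limit(const char *label, rlim_t value)
{
    if (value == RLIM_INFINITY)
        printf("%s: unlimited\n", label);
    else
        printf("%s: %llu\n", label, (unsigned long long)value);
}

/* Prints the soft and hard RLIMIT_NOFILE values of the calling process,
   the numbers `ulimit -Sn` and `ulimit -Hn` show. If `out` is not NULL the
   limits are also copied there. Returns 0 on success, -1 on failure. */
int print_nofile_limits(struct rlimit *out)
{
    struct rlimit rl;
    if (getrlimit(RLIMIT_NOFILE, &rl) != 0) {
        perror("getrlimit");
        return -1;
    }
    print_limit("soft", rl.rlim_cur);
    print_limit("hard", rl.rlim_max);
    if (out != NULL)
        *out = rl;
    return 0;
}
```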

Please see the following document if you would like more information on where you can edit ulimits:

Guidelines for setting ulimits (WebSphere Application Server)

http://www.IBM.com/support/docview.wss?rs=180&uid=swg21469413

Operating Systems

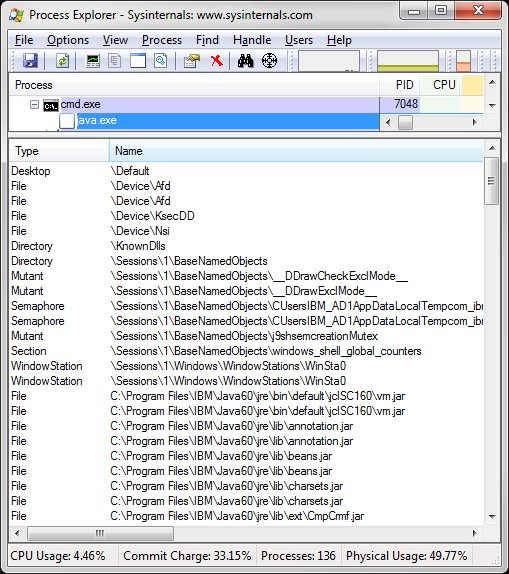

By default, Windows does not ship with a tool to debug this type of problem. Instead, Microsoft provides a downloadable tool called Process Explorer. This tool identifies the open handles/files associated with the Java™ process (but usually not sockets opened by the Winsock component) and shows which handles are still open. Handles that are never closed are what eventually produce the "Too many open files" error message.

To display the handles, click the Gear icon in the toolbar (or press Ctrl+H to toggle the handles view). The icon will change to the icon for DLL files, so you can toggle back to the DLL view.

It is important that you change the Refresh Rate. Select View > Update Speed, and change it to 5 seconds.

There is also another Microsoft utility called Handle that you can download from the following URL:

https://technet.microsoft.com/en-us/sysinternals/bb896655.aspx

This tool is a command line version of Process Explorer. The URL above contains the usage instructions.

AIX

The commands lsof and procfiles are usually the best commands to determine what files and sockets are opened.

lsof

To determine if the number of open files is growing over a period of time, issue lsof to report the open files against a PID on a periodic basis. For example:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

This output does not give the actual file names to which the handles are open. It provides only the name of the file system (directory) in which they are contained. The lsof command indicates if the open file is associated with an open socket or a file. When it references a file, it identifies the file system and the inode, not the file name.

It is best to capture lsof several times to see the rate of growth in the file descriptors.

procfiles

The procfiles command does provide similar information, and also displays the full filenames loaded. It may not show sockets in use.

procfiles -n [PID] > procfiles.out

Other commands (to display filenames that are opened)

INODES and DF

df -kP filesystem_from_lsof | awk '{print $6}' | tail -1

>> Note the filesystem name

find filesystem_name -inum inode_from_lsof -print > filelist.out

>> Shows the actual file name

svmon

svmon -P PID -m | grep pers

(for JFS)

svmon -P PID -m | grep clnt

(for JFS2, NFS)

(this shows the open files in the format filesystem_device:inode)

Use the same procedure as above for finding the actual file name.

Linux

To determine if the number of open files is growing over a period of time, issue lsof to report the open files against a PID on a periodic basis. For example:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

The output will provide you with all of the open files for the specified PID. You will be able to determine which files are opened and which files are growing over time.

It is best to capture lsof several times to see the rate of growth in the file descriptors.

Alternately you can list the contents of the file descriptors as a list of symbolic links in the following directory, where you replace PID with the process ID. This is especially useful if you don’t have access to the lsof command:

ls -al /proc/PID/fd
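The ls -al listing above can also be reproduced in C, which is handy in a diagnostic build of an application. This is a Linux-only sketch, and list_fds is a hypothetical helper. Reading another user's process normally requires root. When pid is the calling process, the listing also includes the descriptor that opendir() itself uses.

```c
#define _XOPEN_SOURCE 700
#include <dirent.h>
#include <limits.h>
#include <stdio.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Prints "fd -> target" for every entry in /proc/<pid>/fd, like
   `ls -al /proc/PID/fd`, and returns the number of entries printed
   (or -1 if the directory cannot be opened). */
int list_fds(pid_t pid)
{
    char dir[64];
    snprintf(dir, sizeof dir, "/proc/%ld/fd", (long)pid);

    DIR *d = opendir(dir);
    if (d == NULL) {
        perror(dir);
        return -1;
    }

    int count = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL) {
        if (strcmp(e->d_name, ".") == 0 || strcmp(e->d_name, "..") == 0)
            continue;

        char link[PATH_MAX], target[PATH_MAX];
        snprintf(link, sizeof link, "%s/%s", dir, e->d_name);
        ssize_t n = readlink(link, target, sizeof target - 1);
        if (n < 0)
            continue;           /* descriptor closed while we were listing */
        target[n] = '\0';       /* readlink() does not NUL-terminate */
        printf("%s -> %s\n", e->d_name, target);
        ++count;
    }
    closedir(d);
    return count;
}
```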

Run the following commands to monitor open file (socket) descriptors on Solaris:

pfiles

/usr/proc/bin/pfiles [PID] > pfiles.out

lsof

lsof -p [PID] > lsof.out

This will get one round of lsof output. If you want to determine if the number of open files is growing over time, you can issue the command with the -r option to capture multiple intervals:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

It is best to capture lsof several times to see the rate of growth in the file descriptors.

HP-UX

lsof

lsof -p [PID] > lsof.out

This will get one round of lsof output. If you want to determine if the number of open files is growing over time, you can issue the command with the -r option to capture multiple intervals:

lsof -p [PID] -r [interval in seconds, 1800 for 30 minutes] > lsof.out

It is best to capture lsof several times to see the rate of growth in the file descriptors.


If you use the standard C/C++ POSIX libraries with Windows, the answer is "yes": there is a limit.

However, interestingly, the limit is imposed by the kind of C/C++ libraries that you are using.

I came across the following MySQL bug thread (http://bugs.mysql.com/bug.php?id=24509). They were dealing with the same problem about the number of open files.

However, Paul DuBois explained that the problem could effectively be eliminated in Windows by using …

"Win32 API calls (CreateFile(), WriteFile(), and so forth) and the default maximum number of open files has been increased to 16384. The maximum can be increased further by using the --max-open-files=N option at server startup."
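One rough way to see which ceiling a given C runtime actually enforces is to open the same file repeatedly until fopen() fails. The sketch below is hypothetical test code (probe_max_streams is an invented name) and is not meant for production. On Linux, the count stops near the RLIMIT_NOFILE soft limit, minus the descriptors already open, and errno is set to EMFILE. With the Microsoft C runtime, the stdio stream table, which _setmaxstdio() can enlarge, is typically the first limit reached. The cap argument keeps the probe bounded on systems with very large limits.

```c
#include <errno.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical probe: opens `path` for reading over and over until fopen()
   fails or `cap` streams are open, then closes every stream again.
   Returns the number of successful opens (or -1 if the bookkeeping array
   cannot be allocated) and stores in *err the errno of the failed fopen(),
   or 0 if the cap was reached first. */
int probe_max_streams(const char *path, int cap, int *err)
{
    *err = 0;
    if (cap <= 0)
        return 0;
    FILE **streams = malloc((size_t)cap * sizeof *streams);
    if (streams == NULL)
        return -1;

    int n = 0;
    while (n < cap) {
        FILE *fp = fopen(path, "r");
        if (fp == NULL) {
            *err = errno;       /* EMFILE when the per-process limit is hit */
            break;
        }
        streams[n++] = fp;
    }

    for (int i = 0; i < n; ++i) /* release everything the probe opened */
        fclose(streams[i]);
    free(streams);
    return n;
}
```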

Naturally, you could have a theoretically large number of open files by using a technique similar to database connection pooling, but that would have a severe effect on performance.

Indeed, opening a large number of files could be bad design. However, some situations require it. For example, if you are building a database server that will be used by thousands of users or applications, the server will necessarily have to open a large number of files (or suffer a performance hit by using file-descriptor pooling techniques).
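The pooling idea can be sketched in C as a small least-recently-used cache of FILE* handles. This is a hypothetical illustration: file_cache, cache_get, and cache_close_all are invented names, and the sketch assumes every cached file is opened with the same mode. At most CACHE_SLOTS files are open at once. A request for an uncached file first closes the least recently used one. The extra fclose()/fopen() pair on every miss is the performance cost the answer refers to.

```c
#include <stdio.h>
#include <string.h>

#define CACHE_SLOTS 4       /* at most this many files are open at once */
#define MAX_PATH_LEN 256

struct cache_slot {
    char path[MAX_PATH_LEN];
    FILE *fp;               /* NULL means the slot is empty */
    unsigned long last_use; /* logical clock value of the last access */
};

struct file_cache {
    struct cache_slot slots[CACHE_SLOTS];
    unsigned long clock;
    const char *mode;       /* one fopen() mode shared by every cached file */
};

void cache_init(struct file_cache *c, const char *mode)
{
    memset(c, 0, sizeof *c);
    c->mode = mode;
}

/* Returns an open FILE* for `path`, reusing a cached handle on a hit.
   On a miss it takes an empty slot if there is one, otherwise it closes
   the least recently used handle first. Returns NULL on failure. */
FILE *cache_get(struct file_cache *c, const char *path)
{
    struct cache_slot *victim = &c->slots[0];
    ++c->clock;

    for (int i = 0; i < CACHE_SLOTS; ++i) {
        struct cache_slot *s = &c->slots[i];
        if (s->fp != NULL && strcmp(s->path, path) == 0) {
            s->last_use = c->clock;     /* hit: no new descriptor needed */
            return s->fp;
        }
        /* Prefer an empty slot; among full slots keep the oldest. */
        if (victim->fp != NULL &&
            (s->fp == NULL || s->last_use < victim->last_use))
            victim = s;
    }

    if (strlen(path) >= MAX_PATH_LEN)
        return NULL;
    if (victim->fp != NULL)
        fclose(victim->fp);             /* evict: frees one descriptor */

    victim->fp = fopen(path, c->mode);
    if (victim->fp == NULL)
        return NULL;
    strcpy(victim->path, path);
    victim->last_use = c->clock;
    return victim->fp;
}

void cache_close_all(struct file_cache *c)
{
    for (int i = 0; i < CACHE_SLOTS; ++i) {
        if (c->slots[i].fp != NULL) {
            fclose(c->slots[i].fp);
            c->slots[i].fp = NULL;
        }
    }
}
```

With CACHE_SLOTS set to 4, cycling through ten files keeps at most four descriptors open. The price is a close and a reopen on each miss.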

I have recently come across a nasty Too many open files error, and noticed the information on the internet about what that might mean or how to solve it doesn’t always paint a clear picture.

Here I try to put together a more complete guide by gathering all the resources I found as I was dealing with the issue myself.

What are file limits and file descriptors?

In Unix-like systems, each process is associated with sets of usage limits, which specify the amount of system resources it can use. One of those is the limit of open file descriptors (RLIMIT_NOFILE).

But what is a file descriptor?

When a file is opened by a process, a file descriptor is created. It is an abstract indicator used to access the file itself, and acts as its I/O interface. File descriptors index into a per-process file descriptor table maintained by the kernel.

When a process opens too many file descriptors without closing them, we stumble into the Too many open files error.
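Here is a short POSIX illustration of the table-index idea (demo_fd_reuse is a hypothetical name). open() must return the lowest-numbered descriptor not currently open in the process. As a result, a number freed by close() is handed out again by the next open().

```c
#define _XOPEN_SOURCE 700
#include <fcntl.h>
#include <stdio.h>
#include <unistd.h>

/* Opens `path` twice, closes the first descriptor, and opens it a third
   time. Because open() returns the lowest free slot in the process's
   descriptor table, the third call reuses the first number.
   Returns 1 if the number was reused, 0 if not, -1 on error. */
int demo_fd_reuse(const char *path)
{
    int a = open(path, O_RDONLY);
    int b = open(path, O_RDONLY);
    if (a < 0 || b < 0) {
        perror("open");
        if (a >= 0) close(a);
        if (b >= 0) close(b);
        return -1;
    }
    printf("first=%d second=%d\n", a, b);

    close(a);                   /* slot `a` in the table is free again */
    int c = open(path, O_RDONLY);
    if (c < 0) {
        perror("open");
        close(b);
        return -1;
    }
    printf("third=%d\n", c);

    int reused = (c == a);
    close(b);
    close(c);
    return reused;
}
```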

tl;dr: How to check current file limits

Once you get a Too many open files error, check the limits of the affected process:

$ cat /proc/<pid>/limits

There are two types of limits:

- Soft: the current limit itself

- Hard: the max value to which the soft limit may be raised (by unprivileged users)

Once you know the limits, you can get the actual number of files that are currently opened by your process like this:

$ ls -1 /proc/<PID>/fd | wc -l

Finally you can just compare the soft limit with the number of open file descriptors to confirm that your process is close to reaching the limit.
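The comparison can also be automated. Below is a Linux-only sketch that assumes the /proc layout shown above (fd_usage is a hypothetical helper). It reads the "Max open files" soft limit from /proc/<pid>/limits and counts the entries in /proc/<pid>/fd.

```c
#define _XOPEN_SOURCE 700
#include <dirent.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <unistd.h>

/* Stores in *soft the "Max open files" soft limit from /proc/<pid>/limits
   (-1 if it reads "unlimited") and in *open_fds the number of entries in
   /proc/<pid>/fd. Returns 0 on success, -1 on error. */
int fd_usage(pid_t pid, long *open_fds, long *soft)
{
    static const char key[] = "Max open files";
    char path[64], line[256];
    int found = 0;

    snprintf(path, sizeof path, "/proc/%ld/limits", (long)pid);
    FILE *fp = fopen(path, "r");
    if (fp == NULL)
        return -1;
    while (fgets(line, sizeof line, fp) != NULL) {
        if (strncmp(line, key, sizeof key - 1) == 0) {
            /* Columns after the name: soft limit, hard limit, units. */
            char *p = line + sizeof key - 1;
            while (*p == ' ')
                ++p;
            *soft = strncmp(p, "unlimited", 9) == 0 ? -1 : strtol(p, NULL, 10);
            found = 1;
            break;
        }
    }
    fclose(fp);
    if (!found)
        return -1;

    snprintf(path, sizeof path, "/proc/%ld/fd", (long)pid);
    DIR *d = opendir(path);
    if (d == NULL)
        return -1;
    long n = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL)
        if (e->d_name[0] != '.')        /* skip "." and ".." */
            ++n;
    closedir(d);
    /* When inspecting ourselves, opendir()'s own descriptor was counted. */
    *open_fds = (pid == getpid()) ? n - 1 : n;
    return 0;
}
```

A monitoring job could call fd_usage() with the PID of a suspect process and raise an alert when open_fds gets close to soft.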

Once you have determined whether you have a bug in your application (see below how that might not be so obvious), or the limit is simply too low, you might decide you want to increase it.

Increasing process file limits

Open file limits for processes are normally set by:

- the kernel at boot time (the very first limit is set for the init process). To see these limits: cat /proc/1/limits

- the parent process: a process created with the fork(2) system call inherits its parent's limits, so every process ultimately inherits them from init

Once a process is running, limits can be overridden in a number of ways, depending on your context.

Just for the current process and all its children

You can use the ulimit shell built-in:

$ ulimit -n <new limit>

You can optionally add the -H or -S flag to change only the hard or only the soft limit.

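Since ulimit is a shell built-in, the new limit applies only to the current shell and the processes started from it.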

More info on the man page

For another running process

You can use the new(ish) utility prlimit:

$ prlimit --pid 1 --nofile=1337:1337

Where the first number is the soft limit, and the second number is the hard limit.

You can also give it a command instead of a PID, and it will run that command with the given resources:

$ prlimit --nofile=1337:1337 echo hello

More info on the man page

For all processes of a certain user

You can replace the kernel/inherited values every time a user session is opened by using a Pluggable Authentication Modules (PAM) module called pam_limits.

Most major Linux distributions are already shipped with this module as part of their standard PAM configuration, and its role is to set parameters on system resources on a per-user (or per-group) basis.

You can configure it by editing the /etc/security/limits.conf file, for example:

myuser hard nofile 20000
myuser soft nofile 15000

Tip: you can also use the wildcard * instead of the user name if you want the change to be applied to all users

After this, you need to edit the file /etc/pam.d/login and make sure it contains the following line:

session required pam_limits.so

The changes will be permanent and survive across reboots. Note, however, that they can still be overridden for a single process by ulimit.

From within your application (system calls)

If you need to fiddle with process limits from within your application itself, Linux exposes three system calls to do that (which are ultimately what’s used under the hood by ulimit or any other utility):

- prlimit

- setrlimit

- getrlimit

More info on the man page

You should consult the documentation of your programming language of choice to see if they offer a wrapper for them.
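In C, no wrapper is needed: getrlimit() and setrlimit() can be called directly. The minimal sketch below (raise_nofile_soft_limit is a hypothetical name) lifts the soft limit to the current hard limit, which an unprivileged process is allowed to do. Raising the hard limit itself requires privileges (CAP_SYS_RESOURCE on Linux). On macOS the hard limit may be reported as RLIM_INFINITY, in which case the setrlimit() call in this sketch can fail. That case is not handled here.

```c
#define _XOPEN_SOURCE 700
#include <stdio.h>
#include <sys/resource.h>

/* Raises the calling process's RLIMIT_NOFILE soft limit to its hard limit.
   Unprivileged processes may do this; raising the hard limit itself needs
   privileges. Returns 0 on success, -1 on failure. Child processes started
   afterwards inherit the new limit. */
int raise_nofile_soft_limit(void)
{
    struct rlimit rl;
    if (getrlimit(RLIMIT_NOFILE, &rl) != 0) {
        perror("getrlimit");
        return -1;
    }
    if (rl.rlim_cur == rl.rlim_max)
        return 0;                       /* already at the ceiling */

    rl.rlim_cur = rl.rlim_max;
    if (setrlimit(RLIMIT_NOFILE, &rl) != 0) {
        perror("setrlimit");
        return -1;
    }
    return 0;
}
```

Calling this early in main(), before any worker threads or child processes start, is a common place for it. The program still cannot exceed whatever hard limit the shell, PAM, or container runtime set.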

If you’re using Docker

The ulimit

From inside a Docker container, you cannot override file limits with ulimit.

(This does not apply for containers running in privileged mode.)

However, you can start the container with its own custom limits in two ways:

As an argument to the docker run command

You can use the --ulimit flag (first number being the soft limit, and the second being the hard limit):

$ docker run --ulimit nofile=1337:1337 <image-tag>

In docker-compose

The same option is available in docker-compose:

version: '3'
services:
  myservice:
    ulimits:
      nofile:
        soft: 1337
        hard: 1337

Increasing system-wide file limits

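In Linux systems there is also file-max, a kernel-wide limit on the total number of file handles that can be allocated across all processes.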

You might want to increase this limit too if you are dealing with several processes with high limit requirements, or if you just want to raise the ceiling of the limits you can set for a single process.

You can check its current value with:

$ cat /proc/sys/fs/file-max

(Note that this is just a number and there is no concept of hard or soft limit, as this is a setting for the kernel and we are not in a “per process” context)

And change its default value in the file /etc/sysctl.conf

fs.file-max=250000

If you want to apply the change right away, you can do so with:

$ sysctl -p
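The kernel-wide counters can also be read from a program. Below is a Linux-only sketch (read_system_file_counters is a hypothetical name) that parses /proc/sys/fs/file-nr. Its three fields are the number of allocated file handles, the number of allocated but unused handles, and the maximum, which is the same value as file-max.

```c
#include <stdio.h>

/* Reads /proc/sys/fs/file-nr: allocated handles, allocated but unused
   handles, and the maximum (the value of file-max). Returns 0 and fills
   *allocated and *max on success, -1 if the file cannot be read or parsed. */
int read_system_file_counters(unsigned long long *allocated,
                              unsigned long long *max)
{
    unsigned long long unused;
    FILE *fp = fopen("/proc/sys/fs/file-nr", "r");
    if (fp == NULL)
        return -1;
    int fields = fscanf(fp, "%llu %llu %llu", allocated, &unused, max);
    fclose(fp);
    return fields == 3 ? 0 : -1;
}
```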

Why are file limits a thing?

The main reasons why Linux limits the number of open files are:

- The operating system needs memory to manage each open file descriptor, and memory is a limited resource. If you were to set limits that are too high and the system went out of memory, any remaining open files could be corrupted.

- Security: if any user process was able to keep opening files they could do so until the server goes down.

The default limits on most Linux distributions might be conservative, but you should still be careful when increasing them.

Debugging why your application might be reaching its file limits

Before you go increasing file limits left and right, you should consider whether your application might have a file descriptor leak, e.g. opening files without closing them. If it does, increasing file limits might not only fail to solve the problem but actually make it worse.

Take for example this code which opens file descriptors in a loop without ever closing them:

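#include <limits.h>
#include <stdio.h>

int main(int argc, char **argv) {
    char filename[100];
    for (size_t i = 0; i < INT_MAX; ++i) {
        sprintf(filename, "/tmp/%010zu.txt", i);
        /* Deliberate leak: fp is never passed to fclose(). Once the limit
           is reached, fopen() returns NULL and sets errno to EMFILE. */
        FILE *fp = fopen(filename, "w");
    }
    return 0;
}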

If this is left running and there is no ceiling on the file limits, ultimately the memory of the system will fill with garbage file descriptors.

The correct behaviour would be to always invoke fclose on the file pointer once the file is no longer needed.
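Below is a minimal sketch of a corrected variant of the loop above. Each stream is checked for fopen() failure and closed with fclose() before the next iteration, so the process never holds more than one of these descriptors at a time. The directory and file count are parameters (write_numbered_files is a hypothetical name) instead of the INT_MAX files under /tmp used in the original.

```c
#include <errno.h>
#include <stdio.h>
#include <string.h>

/* Writes `count` numbered files into `dir`, closing each stream before the
   next fopen(), so at most one of these descriptors is open at any time.
   Returns how many files were written; stops early on the first error. */
size_t write_numbered_files(const char *dir, size_t count)
{
    char filename[4096];
    for (size_t i = 0; i < count; ++i) {
        snprintf(filename, sizeof filename, "%s/%010zu.txt", dir, i);
        FILE *fp = fopen(filename, "w");
        if (fp == NULL) {
            fprintf(stderr, "fopen %s: %s\n", filename, strerror(errno));
            return i;
        }
        fprintf(fp, "%zu\n", i);
        if (fclose(fp) != 0) {          /* fclose() releases the descriptor */
            fprintf(stderr, "fclose %s: %s\n", filename, strerror(errno));
            return i;
        }
    }
    return count;
}
```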

An application which opens regular files without ever closing them is an obvious example, but it is far from being the only one. In reality, you might be surprised at what kinds of things an application can do that rely on opening file descriptors.

Everything is a file

“Everything is a file” describes one of the defining features of Unix-like systems: a wide range of input/output resources are represented as simple streams of bytes exposed through the filesystem API.

Unix files are able to represent:

• Regular files

• Directories

• Symbolic links

• Devices

• Pipes and named pipes

• Sockets

“Everything is a file”. However that term does the idea an injustice as it overstates the reality. Clearly everything is not a file. Some things are devices and some things are pipes and while they may share some characteristics with files, they certainly are not files. A more accurate, though less catchy, characterization would be “everything can have a file descriptor”. It is the file descriptor as a unifying concept that is key to this design. It is the file descriptor that makes files, devices, and inter-process I/O compatible.

– Neil Brown, Ghosts of Unix Past: a historical search for design patterns

It is apparent that file descriptors are a central part of the functioning of Unix-like operating systems, way beyond the day to day of writing and reading regular files. Therefore, we should consider every interaction with the underlying operating system as a potential for a file descriptor leakage.

In particular, you might want to check all integration points between your application and:

- I/O devices

- the network (especially long running requests!)

- other processes

- the filesystem
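To make the point concrete, the POSIX sketch below (show_non_file_descriptors is a hypothetical name) creates a pipe and a UNIX-domain socket pair. Neither touches a regular file, yet each occupies two slots in the same per-process descriptor table that open() uses, so both count against RLIMIT_NOFILE.

```c
#define _XOPEN_SOURCE 700
#include <stdio.h>
#include <sys/socket.h>
#include <unistd.h>

/* Creates a pipe and a UNIX-domain socket pair, prints the descriptor
   numbers they received, and closes all four again. None of them refers to
   a regular file, yet each one counts against RLIMIT_NOFILE while open.
   Returns 0 on success, -1 on error. */
int show_non_file_descriptors(void)
{
    int pipefd[2], sockfd[2];

    if (pipe(pipefd) != 0) {
        perror("pipe");
        return -1;
    }
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sockfd) != 0) {
        perror("socketpair");
        close(pipefd[0]);
        close(pipefd[1]);
        return -1;
    }
    printf("pipe:       read=%d write=%d\n", pipefd[0], pipefd[1]);
    printf("socketpair: %d %d\n", sockfd[0], sockfd[1]);

    /* Skipping these close() calls would leak four descriptors per call. */
    close(pipefd[0]);
    close(pipefd[1]);
    close(sockfd[0]);
    close(sockfd[1]);
    return 0;
}
```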

You can easily monitor the number of open file descriptors by periodically invoking a tool such as lsof, or a command like:

$ ls -1 /proc/<PID>/fd | wc -l

Or by attaching a profiler to your application.

If the number of file descriptors keeps increasing rather than staying stable, the application might have a file descriptor leak.
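Instead of watching the count by eye, a test can measure it around the code under suspicion. Below is a Linux-only sketch that assumes /proc/self/fd is available. fd_delta, leaky_open, and clean_open are hypothetical names, and the last two exist only to exercise the check.

```c
#define _XOPEN_SOURCE 700
#include <dirent.h>
#include <stdio.h>

/* Number of descriptors the calling process has open, read from
   /proc/self/fd; the descriptor opendir() uses is not counted. */
static long count_own_fds(void)
{
    DIR *d = opendir("/proc/self/fd");
    if (d == NULL)
        return -1;
    long n = 0;
    struct dirent *e;
    while ((e = readdir(d)) != NULL)
        if (e->d_name[0] != '.')        /* skip "." and ".." */
            ++n;
    closedir(d);
    return n - 1;
}

/* Calls fn() `iterations` times and returns how many descriptors were left
   open afterwards (or -1 if /proc is unavailable). A result that grows with
   `iterations` points to a descriptor leak in fn(). */
long fd_delta(void (*fn)(void), int iterations)
{
    long before = count_own_fds();
    for (int i = 0; i < iterations; ++i)
        fn();
    long after = count_own_fds();
    return (before < 0 || after < 0) ? -1 : after - before;
}

/* Two hypothetical functions used only to exercise fd_delta(). */
static void leaky_open(void)
{
    FILE *fp = fopen("/dev/null", "r");
    (void)fp;                           /* never closed: one descriptor leaks */
}

static void clean_open(void)
{
    FILE *fp = fopen("/dev/null", "r");
    if (fp != NULL)
        fclose(fp);
}
```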

Resources and further reading:

- https://ops.tips/blog/proc-pid-limits-under-the-hood/

- https://subvisual.com/blog/posts/2020-06-06-fixing-too-many-open-files

- https://linoxide.com/linux-how-to/03-methods-change-number-open-file-limit-linux/

- https://serverfault.com/questions/122679/how-do-ulimit-n-and-proc-sys-fs-file-max-differ

Changing the folder settings may come in handy

by Madalina Dinita


- Encryption & data protection usually protects against unauthorized processing on your Windows PC.

- The error is often resolved by removing folder encryption or repairing damaged registry files.

If you got the error message Can’t open files in this folder because it contains system files, you have come to the right place. Sometimes it comes with the error code ERROR_TOO_MANY_OPEN_FILES.

Our team has dug into the file encryption error, and we will show you the best way to resolve the stubborn file error. We also will present potential causes so that you may avoid future occurrences.

What does Can’t open files in this folder because it contains system files message mean?

The message means that you may be trying to gain access to files from outside your privilege level. Sometimes this is intentional, as the OS uses it to keep system files safe; other times, it may be a bug. The following are the most likely triggers:

- File encryption – The Windows Installer package folder or the folder in which you want to install the Windows Installer package is encrypted.

- Wrong OS version – The temp folder (%TEMP%) is encrypted on a version of Windows other than Windows Vista. Tools like the Microsoft Encrypting File System Assistant may have encrypted the temp folder, triggering this error code.

- Antivirus conflicts – If you use an antivirus incompatible with your OS, you may encounter a file encryption error.

- Corrupted files – When certain system files become corrupted, it is hard to gain access and may trigger the error.

With the possible causes out of the way, let us explore solutions.

How can I fix Can’t open files in this folder because it contains system files?

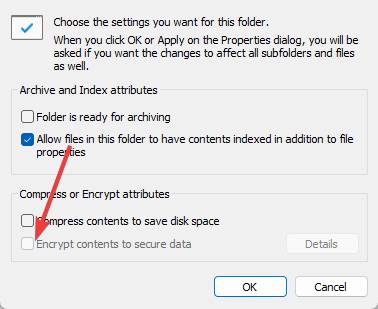

1. Remove folder encryption

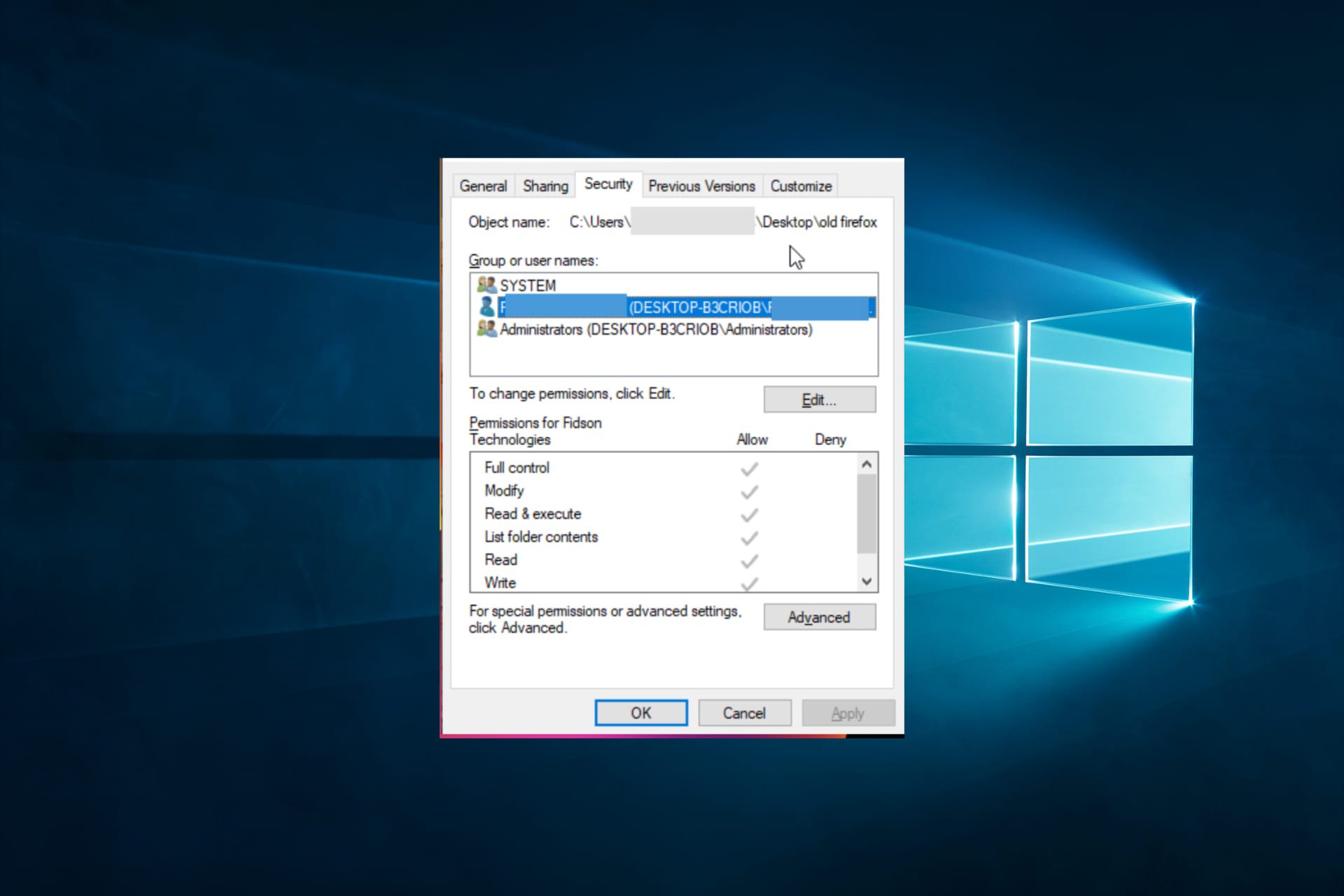

- Press Windows + E to open File Explorer, then navigate to the encrypted folder.

- Right-click on it and select Properties.

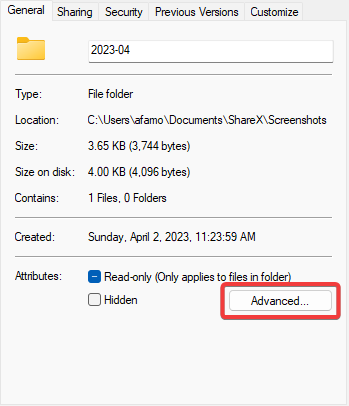

- Click the Advanced button.

- If checked, uncheck Encrypt contents to secure data.

- Click OK, then in the Properties windows, click Apply and OK.

Note that while performing the steps above, it is recommended you disconnect all peripherals from your computer. Sometimes, peripherals may cause various install issues; unplugging them is the best way to prevent this problem.


If you’re still experiencing The system cannot open the file error after performing the above steps, continue with the following troubleshooting steps.

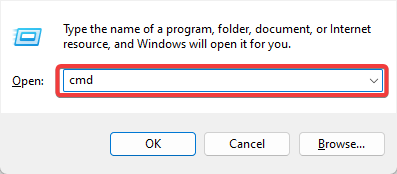

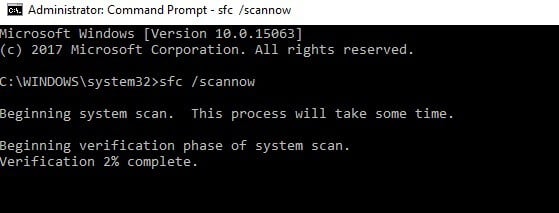

2. Repair your registry

- Press Windows + R, type cmd, and hit Ctrl + Shift + Enter.

- Type the command below and hit Enter:

sfc /scannow

- Wait for the scanning process to complete, and then restart your computer. All corrupted files will be replaced on reboot.

If you haven’t installed any registry cleaner on your computer, check out our article on the best registry cleaners for Windows 10 PCs.

Otherwise, the simplest way to repair your registry is to use a dedicated tool like the one below. Remember to back up your registry first in case anything goes wrong.

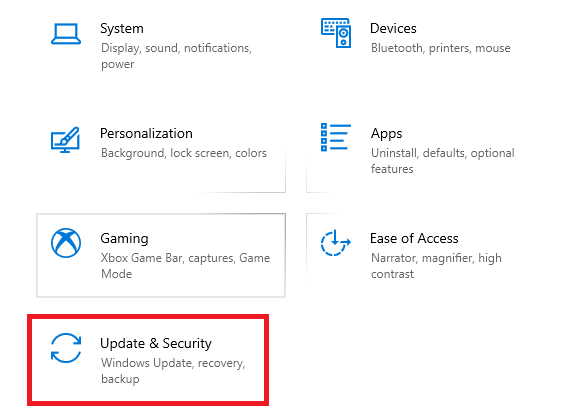

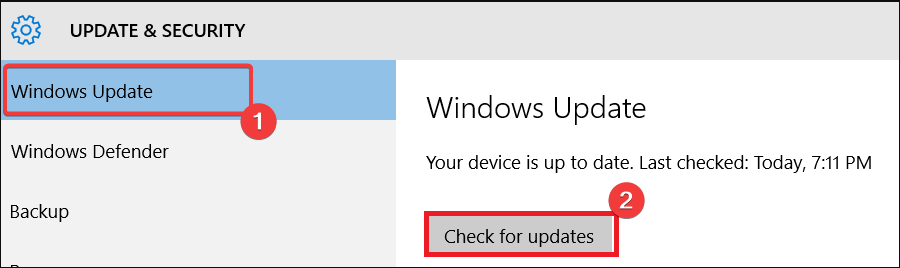

3. Update your OS

- Press Windows + I to open the system Settings.

- Click on Update & Security.

- Select Windows update from the left pane and choose Check for updates.

Ensure you’re running the latest Windows OS updates on your machine. As a quick reminder, Microsoft constantly rolls out Windows updates to improve the system’s stability and fix issues like Can’t open files in this folder because it contains system files.

- File System Error (-1073741819): How to Fix it

- 0xc0000417 Unknown Software Exception: How to Fix it

- Security Settings Blocked Self-signed Application [Fix]

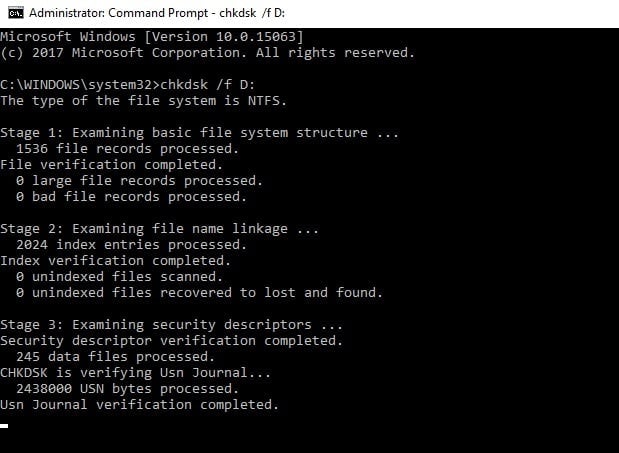

4. Run the chkdsk command

- Press Windows + R, type cmd, and hit Ctrl + Shift + Enter.

- Input the command below and hit Enter (replace X with the letter of your partition):

chkdsk X: /f

- Wait for chkdsk to repair your files.

The chkdsk command helps you detect and repair various disk issues, including corrupted files and folders, which may cause errors.

Look at this guide if you’re having trouble accessing Command Prompt as an admin.

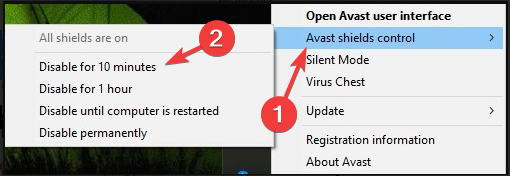

5. Disable your antivirus

- Click on the hidden access arrow in the Taskbar.

- Click on your antivirus icon, hover over Avast shields Control and select Disable for 10 minutes (This step varies for different antivirus software).

Sometimes, your antivirus may prevent you from installing new or upgrading software on your PC.

Temporarily disable your antivirus and then try to complete the installation process. Don’t forget to enable your antivirus after you finish installing the package.


Windows Installer also secures new accounts, files, folders, and registry keys by specifying a security descriptor that denies or allows permissions.