What did you do?

Create the following prometheus.yaml file:

    apiVersion: v1
    kind: Namespace
    metadata:
      name: openfaas
      labels:
        role: openfaas-system
        access: openfaas-system
        istio-injection: enabled
    ---
    apiVersion: v1
    kind: Namespace
    metadata:
      name: openfaas-fn
      labels:
        istio-injection: enabled
        role: openfaas-fn
    ---
    # Source: openfaas/templates/psp.yaml
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: openfaas-psp
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        heritage: Helm
        release: openfaas
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
    spec:
      privileged: false
      hostIPC: false
      hostNetwork: false
      hostPID: false
      readOnlyRootFilesystem: false
      allowPrivilegeEscalation: false
      allowedCapabilities:
        - NET_ADMIN
        - NET_RAW
      fsGroup:
        rule: RunAsAny
      hostPorts:
        - max: 65535
          min: 1
      runAsUser:
        rule: RunAsAny
      seLinux:
        rule: RunAsAny
      supplementalGroups:
        rule: RunAsAny
      volumes:
        - '*'
    ---
    # Source: openfaas/templates/prometheus-rbac.yaml
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: openfaas-prometheus
      namespace: "openfaas"
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
    ---
    # Source: openfaas/templates/alertmanager-cfg.yaml
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: alertmanager-config
        heritage: Helm
        release: openfaas
      name: alertmanager-config
      namespace: "openfaas"
    data:
      alertmanager.yml: |
        route:
          group_by: ['alertname', 'cluster', 'service']
          group_wait: 5s
          group_interval: 10s
          repeat_interval: 30s
          receiver: scale-up
          routes:
            - match:
                service: gateway
                receiver: scale-up
                severity: major
        inhibit_rules:
          - source_match:
              severity: 'critical'
            target_match:
              severity: 'warning'
            equal: ['alertname', 'cluster', 'service']
        receivers:
          - name: 'scale-up'
            webhook_configs:
              - url: http://gateway.openfaas:8080/system/alert
                send_resolved: true
                http_config:
                  basic_auth:
                    username: admin
                    password_file: /var/secrets/basic-auth-password
    ---
    # Source: openfaas/templates/prometheus-cfg.yaml
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus-config
        heritage: Helm
        release: openfaas
      name: prometheus-config
      namespace: "openfaas"
    data:
      prometheus.yml: |
        global:
          scrape_interval: 15s
          evaluation_interval: 15s
          external_labels:
            monitor: 'faas-monitor'
        rule_files:
          - 'alert.rules.yml'
        scrape_configs:
          - job_name: 'prometheus'
            scrape_interval: 5s
            static_configs:
              - targets: ['localhost:9090']
          - job_name: 'kubernetes-pods'
            scrape_interval: 5s
            honor_labels: false
            kubernetes_sd_configs:
              - role: pod
                namespaces:
                  names:
                    - openfaas
                    - openfaas-fn
            relabel_configs:
              - action: labelmap
                regex: __meta_kubernetes_pod_label_(.+)
              - source_labels: [__meta_kubernetes_namespace]
                action: replace
                target_label: kubernetes_namespace
              - source_labels: [__meta_kubernetes_pod_name]
                action: replace
                target_label: kubernetes_pod_name
              - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
                action: keep
                regex: true
              - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
                action: replace
                regex: ([^:]+)(?::\d+)?;(\d+)
                replacement: $1:$2
                target_label: __address__
        alerting:
          alertmanagers:
            - static_configs:
                - targets:
                    - alertmanager:9093
      alert.rules.yml: |
        groups:
          - name: openfaas
            rules:
              - alert: service_down
                expr: up == 0
              - alert: APIHighInvocationRate
                expr: sum(rate(gateway_function_invocation_total{code="200"}[10s])) BY (function_name) > 5
                for: 5s
                labels:
                  service: gateway
                  severity: major
                annotations:
                  description: High invocation total on "{{$labels.function_name}}"
                  summary: High invocation total on "{{$labels.function_name}}"
    ---
    # Source: openfaas/templates/prometheus-rbac.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: openfaas-prometheus
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
    rules:
      - apiGroups: [""]
        resources:
          - services
          - endpoints
          - pods
        verbs: ["get", "list", "watch"]
    ---
    # Source: openfaas/templates/psp.yaml
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: openfaas-psp
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        heritage: Helm
        release: openfaas
    rules:
      - apiGroups: ['policy']
        resources: ['podsecuritypolicies']
        verbs: ['use']
        resourceNames:
          - openfaas-psp
    ---
    # Source: openfaas/templates/prometheus-rbac.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: openfaas-prometheus
      namespace: "openfaas"
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: openfaas-prometheus
    subjects:
      - kind: ServiceAccount
        name: openfaas-prometheus
        namespace: "openfaas"
    ---
    # Source: openfaas/templates/prometheus-rbac.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: openfaas-prometheus
      namespace: "openfaas-fn"
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: openfaas-prometheus
    subjects:
      - kind: ServiceAccount
        name: openfaas-prometheus
        namespace: "openfaas"
    ---
    # Source: openfaas/templates/psp.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: openfaas-psp
      namespace: "openfaas"
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: openfaas-psp
    subjects:
      # bind the PSP cluster role to all service accounts in the OF namespace
      - apiGroup: rbac.authorization.k8s.io
        kind: Group
        name: system:serviceaccounts:openfaas
        namespace: openfaas
    ---
    # Source: openfaas/templates/alertmanager-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: alertmanager
        heritage: Helm
        release: openfaas
      name: alertmanager
      namespace: "openfaas"
    spec:
      type: ClusterIP
      ports:
        - port: 9093
          protocol: TCP
      selector:
        app: alertmanager
    ---
    # Source: openfaas/templates/prometheus-svc.yaml
    apiVersion: v1
    kind: Service
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
      name: prometheus
      namespace: "openfaas"
    spec:
      type: ClusterIP
      ports:
        - port: 9090
          protocol: TCP
      selector:
        app: prometheus
    ---
    # Source: openfaas/templates/alertmanager-dep.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: alertmanager
        heritage: Helm
        release: openfaas
      name: alertmanager
      namespace: "openfaas"
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alertmanager
      template:
        metadata:
          labels:
            app: alertmanager
          annotations:
            sidecar.istio.io/inject: "true"
            checksum/alertmanager-config: "d11068803eeb977273e116dc044c25fb39b03295446b1251b7f1fbc26104ed04"
        spec:
          containers:
            - name: alertmanager
              image: prom/alertmanager:v0.18.0
              imagePullPolicy: Always
              command:
                - "alertmanager"
                - "--config.file=/alertmanager.yml"
                - "--storage.path=/alertmanager"
                - "--cluster.listen-address="
              livenessProbe:
                httpGet:
                  path: /-/ready
                  port: 9093
                timeoutSeconds: 30
              readinessProbe:
                httpGet:
                  path: /-/ready
                  port: 9093
                timeoutSeconds: 30
              ports:
                - containerPort: 9093
                  protocol: TCP
              volumeMounts:
                - mountPath: /alertmanager.yml
                  name: alertmanager-config
                  subPath: alertmanager.yml
                - name: auth
                  readOnly: true
                  mountPath: "/var/secrets"
          volumes:
            - name: alertmanager-config
              configMap:
                name: alertmanager-config
                items:
                  - key: alertmanager.yml
                    path: alertmanager.yml
                    mode: 0644
            - name: auth
              secret:
                secretName: basic-auth
    ---
    # Source: openfaas/templates/prometheus-dep.yaml
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: prometheus
        heritage: Helm
        release: openfaas
      name: prometheus
      namespace: "openfaas"
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
      template:
        metadata:
          labels:
            app: prometheus
          annotations:
            sidecar.istio.io/inject: "true"
            checksum/prometheus-config: "833784fe79824d5a9adfaf93561abc4dabbf746669235c5ac020e85a9a563510"
        spec:
          serviceAccountName: openfaas-prometheus
          containers:
            - name: prometheus
              image: prom/prometheus:v2.11.0
              command:
                - "prometheus"
                - "--config.file=/etc/prometheus/prometheus.yml"
              imagePullPolicy: Always
              livenessProbe:
                httpGet:
                  path: /-/healthy
                  port: 9090
                timeoutSeconds: 30
              readinessProbe:
                httpGet:
                  path: /-/healthy
                  port: 9090
                timeoutSeconds: 30
              ports:
                - containerPort: 9090
                  protocol: TCP
              volumeMounts:
                - mountPath: /etc/prometheus/prometheus.yml
                  name: prometheus-config
                  subPath: prometheus.yml
                - mountPath: /etc/prometheus/alert.rules.yml
                  name: prometheus-config
                  subPath: alert.rules.yml
                - mountPath: /prometheus/data
                  name: prom-data
          volumes:
            - name: prometheus-config
              configMap:
                name: prometheus-config
                items:
                  - key: prometheus.yml
                    path: prometheus.yml
                    mode: 0644
                  - key: alert.rules.yml
                    path: alert.rules.yml
                    mode: 0644
            - name: prom-data
              emptyDir: {}
    ---
    # Source: openfaas/templates/istio-mtls.yaml
    # enforce mTLS to openfaas control plane
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: default
      namespace: openfaas
    spec:
      host: "*.openfaas.svc.cluster.local"
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL
    ---
    # Source: openfaas/templates/istio-mtls.yaml
    # enforce mTLS to functions
    apiVersion: networking.istio.io/v1alpha3
    kind: DestinationRule
    metadata:
      name: default
      namespace: "openfaas-fn"
    spec:
      host: "*.openfaas-fn.svc.cluster.local"
      trafficPolicy:
        tls:
          mode: ISTIO_MUTUAL
    ---
    # Source: openfaas/templates/secret.yaml
    apiVersion: v1
    kind: Secret
    metadata:
      name: basic-auth
      namespace: "openfaas"
      labels:
        app: openfaas
        chart: openfaas-6.0.4
        component: gateway
        heritage: Helm
        release: openfaas
      annotations:
        "helm.sh/hook": "pre-install"
    data:
      basic-auth-user: "YWRtaW4="
      # kubectl -n openfaas get secret basic-auth -o jsonpath="{.data.basic-auth-password}" | base64 --decode
      basic-auth-password: "dEtuRzQ5cVNqYzFP"

Run kubectl apply -f ./prometheus.yaml and wait for the Prometheus pod to come online.

What did you expect to see?

No error message in the logs of the Prometheus pod.

What did you see instead? Under which circumstances?

"Connection refused" errors in the logs of the Prometheus pod: Kubernetes service discovery cannot list the pods in the openfaas and openfaas-fn namespaces (see the logs below).

Environment

- System information:

Linux 4.15.0-1096-azure x86_64

- Prometheus version:

prometheus, version 2.11.0 (branch: HEAD, revision: 4ef66003d9855ed2b7a41e987b33828ec36db34d)

build user: root@0dc27cf95f36

build date: 20190709-09:54:35

go version: go1.12.7

- Alertmanager version:

alertmanager, version 0.18.0 (branch: HEAD, revision: 1ace0f76b7101cccc149d7298022df36039858ca)

build user: root@868685ed3ed0

build date: 20190708-14:31:49

go version: go1.12.6

- Prometheus configuration file:

prometheus.yml

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        monitor: 'faas-monitor'
    rule_files:
      - 'alert.rules.yml'
    scrape_configs:
      - job_name: 'prometheus'
        scrape_interval: 5s
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'kubernetes-pods'
        scrape_interval: 5s
        honor_labels: false
        kubernetes_sd_configs:
          - role: pod
            namespaces:
              names:
                - openfaas
                - openfaas-fn
        relabel_configs:
          - action: labelmap
            regex: __meta_kubernetes_pod_label_(.+)
          - source_labels: [__meta_kubernetes_namespace]
            action: replace
            target_label: kubernetes_namespace
          - source_labels: [__meta_kubernetes_pod_name]
            action: replace
            target_label: kubernetes_pod_name
          - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
            action: keep
            regex: true
          - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
            action: replace
            regex: ([^:]+)(?::\d+)?;(\d+)
            replacement: $1:$2
            target_label: __address__
    alerting:
      alertmanagers:
        - static_configs:
            - targets:
                - alertmanager:9093

alert.rules.yml

    groups:
      - name: openfaas
        rules:
          - alert: service_down
            expr: up == 0
          - alert: APIHighInvocationRate
            expr: sum(rate(gateway_function_invocation_total{code="200"}[10s])) BY (function_name) > 5
            for: 5s
            labels:
              service: gateway
              severity: major
            annotations:
              description: High invocation total on "{{$labels.function_name}}"
              summary: High invocation total on "{{$labels.function_name}}"

- Alertmanager configuration file:

    route:
      group_by: ['alertname', 'cluster', 'service']
      group_wait: 5s
      group_interval: 10s
      repeat_interval: 30s
      receiver: scale-up
      routes:
        - match:
            service: gateway
            receiver: scale-up
            severity: major
    inhibit_rules:
      - source_match:
          severity: 'critical'
        target_match:
          severity: 'warning'
        equal: ['alertname', 'cluster', 'service']
    receivers:
      - name: 'scale-up'
        webhook_configs:
          - url: http://gateway.openfaas:8080/system/alert
            send_resolved: true
            http_config:
              basic_auth:
                username: admin
                password_file: /var/secrets/basic-auth-password

- Logs:

    level=info ts=2020-11-09T19:33:49.233Z caller=main.go:293 msg="no time or size retention was set so using the default time retention" duration=15d
    level=info ts=2020-11-09T19:33:49.233Z caller=main.go:329 msg="Starting Prometheus" version="(version=2.11.0, branch=HEAD, revision=4ef66003d9855ed2b7a41e987b33828ec36db34d)"
    level=info ts=2020-11-09T19:33:49.233Z caller=main.go:330 build_context="(go=go1.12.7, user=root@0dc27cf95f36, date=20190709-09:54:35)"
    level=info ts=2020-11-09T19:33:49.233Z caller=main.go:331 host_details="(Linux 4.15.0-1096-azure #106~16.04.1-Ubuntu SMP Thu Sep 10 18:51:54 UTC 2020 x86_64 prometheus-64d5877fc9-s5nwq (none))"
    level=info ts=2020-11-09T19:33:49.234Z caller=main.go:332 fd_limits="(soft=1048576, hard=1048576)"
    level=info ts=2020-11-09T19:33:49.234Z caller=main.go:333 vm_limits="(soft=unlimited, hard=unlimited)"
    level=info ts=2020-11-09T19:33:49.432Z caller=main.go:652 msg="Starting TSDB ..."
    level=info ts=2020-11-09T19:33:49.432Z caller=web.go:448 component=web msg="Start listening for connections" address=0.0.0.0:9090
    level=info ts=2020-11-09T19:33:49.935Z caller=main.go:667 fs_type=EXT4_SUPER_MAGIC
    level=info ts=2020-11-09T19:33:50.032Z caller=main.go:668 msg="TSDB started"
    level=info ts=2020-11-09T19:33:50.032Z caller=main.go:738 msg="Loading configuration file" filename=/etc/prometheus/prometheus.yml
    level=info ts=2020-11-09T19:33:50.132Z caller=kubernetes.go:192 component="discovery manager scrape" discovery=k8s msg="Using pod service account via in-cluster config"
    level=error ts=2020-11-09T19:33:50.333Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:283: Failed to list *v1.Pod: Get https://10.10.10.1:443/api/v1/namespaces/openfaas/pods?limit=500&resourceVersion=0: dial tcp 10.10.10.1:443: connect: connection refused"
    level=info ts=2020-11-09T19:33:50.433Z caller=main.go:766 msg="Completed loading of configuration file" filename=/etc/prometheus/prometheus.yml
    level=info ts=2020-11-09T19:33:50.433Z caller=main.go:621 msg="Server is ready to receive web requests."
    level=error ts=2020-11-09T19:33:50.532Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:283: Failed to list *v1.Pod: Get https://10.10.10.1:443/api/v1/namespaces/openfaas-fn/pods?limit=500&resourceVersion=0: dial tcp 10.10.10.1:443: connect: connection refused"
    level=error ts=2020-11-09T19:33:51.334Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:283: Failed to list *v1.Pod: Get https://10.10.10.1:443/api/v1/namespaces/openfaas/pods?limit=500&resourceVersion=0: dial tcp 10.10.10.1:443: connect: connection refused"
    level=error ts=2020-11-09T19:33:51.533Z caller=klog.go:94 component=k8s_client_runtime func=ErrorDepth msg="/app/discovery/kubernetes/kubernetes.go:283: Failed to list *v1.Pod: Get https://10.10.10.1:443/api/v1/namespaces/openfaas-fn/pods?limit=500&resourceVersion=0: dial tcp 10.10.10.1:443: connect: connection refused"
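The failing requests in this log are the pod-list calls that Prometheus's Kubernetes service discovery makes against the API server through its in-cluster config ("Using pod service account via in-cluster config"). As a minimal sketch, assuming the API server address comes from the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT variables that Kubernetes injects into every pod (which is what client-go's in-cluster config reads), those URLs can be rebuilt as below. pod_list_urls is a hypothetical helper, and the query string is copied from the log lines rather than derived. In a typical cluster that host variable holds the ClusterIP of the default kubernetes Service, so 10.10.10.1:443 is presumably that address here.

```python
import os
from urllib.parse import urlencode


def pod_list_urls(namespaces, env=None):
    """Rebuild the pod-list URLs seen in the Prometheus log (hypothetical helper).

    Assumes the API server address comes from KUBERNETES_SERVICE_HOST and
    KUBERNETES_SERVICE_PORT, the variables client-go's in-cluster config reads.
    """
    env = os.environ if env is None else env
    host = env["KUBERNETES_SERVICE_HOST"]
    port = env["KUBERNETES_SERVICE_PORT"]
    if ":" in host:  # an IPv6 service IP needs brackets inside a URL
        host = f"[{host}]"
    # Query string copied from the log lines above.
    query = urlencode({"limit": 500, "resourceVersion": 0})
    return [
        f"https://{host}:{port}/api/v1/namespaces/{ns}/pods?{query}"
        for ns in namespaces
    ]
```

Inside the Prometheus pod, pod_list_urls(["openfaas", "openfaas-fn"]) should reproduce the two URLs from the log, which makes it easier to test by hand whether that address accepts connections from within the same pod.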

NOTE

This solution is not for Docker Swarm. It is for standalone containers (several of them) meant to run on an overlay network.

We got the same error when using an overlay network, and here is the solution (configured statically, not dynamically).

This config does not work:

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        monitor: 'promswarm'

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'node'
        static_configs:
          - targets: ['localhost:9100']

Nor does the following one: even though http://docker.for.mac.localhost:9100/ is available, Prometheus still cannot find node-exporter, so this config did not work either:

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        monitor: 'promswarm'

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'node'
        static_configs:
          - targets: ['docker.for.mac.localhost:9100']

However, by simply using the container ID, we can reach that service on its port number.

    $ docker ps
    CONTAINER ID   IMAGE                    COMMAND                  CREATED          STATUS          PORTS                                       NAMES
    a58264faa1a4   prom/prometheus          "/bin/prometheus --c…"   5 minutes ago    Up 5 minutes    0.0.0.0:9090->9090/tcp, :::9090->9090/tcp   unruffled_solomon
    62310f56f64a   grafana/grafana:latest   "/run.sh"                42 minutes ago   Up 42 minutes   0.0.0.0:3000->3000/tcp, :::3000->3000/tcp   wonderful_goldberg
    7f1da9796af3   prom/node-exporter       "/bin/node_exporter …"   48 minutes ago   Up 48 minutes   0.0.0.0:9100->9100/tcp, :::9100->9100/tcp   intelligent_panini

So the node-exporter container (prom/node-exporter) has the ID 7f1da9796af3, and we can update our YAML file to:

    global:
      scrape_interval: 15s
      evaluation_interval: 15s
      external_labels:
        monitor: 'promswarm'

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'node'
        static_configs:
          - targets: ['7f1da9796af3:9100']


UPDATE

I was not happy with this hard-coded solution, so after some more searching I found a more reliable approach: --network-alias NAME, which makes the container routable by that name within the overlay network. The YAML then looks like this:

    scrape_configs:
      - job_name: 'prometheus'
        static_configs:
          - targets: ['localhost:9090']
      - job_name: 'node'
        static_configs:
          - targets: ['node_exporter:9100']

Here, node_exporter is an alias created with the docker run subcommand, e.g.:

    docker run --rm -d -v "/:/host:ro,rslave" --network cloud --network-alias node_exporter --pid host -p 9100:9100 prom/node-exporter --path.rootfs=/host

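In a nutshell, this means that on the overlay network cloud you can reach node-exporter at node_exporter:<PORT>. (For a standalone docker run container to join an overlay network, that network must have been created with --attachable.)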
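All of the configs above come down to one question: can the host:port in targets be reached from Prometheus's own network namespace? Inside a container, localhost is that container itself, which is why localhost:9100 fails, while a name that Docker's embedded DNS resolves on the shared network (the container ID in this setup, or a --network-alias) works. The following is a minimal sketch of a checker that separates the usual failure modes. check_target and its result strings are hypothetical, not part of Prometheus, and it assumes you run it from a container attached to the same network as Prometheus, since the prom/prometheus image itself does not ship Python.

```python
import socket


def check_target(target, timeout=3.0):
    """Classify a Prometheus-style "host:port" target (hypothetical helper).

    Returns "unresolvable", "refused", "timeout", "unreachable" or "open".
    """
    host, _, port = target.rpartition(":")
    host = host.strip("[]")  # allow "[::1]:9100" style IPv6 targets
    try:
        # Name resolution happens first: a name Docker's DNS does not know
        # (e.g. a container on another network) fails here, not at connect().
        infos = socket.getaddrinfo(host, int(port), type=socket.SOCK_STREAM)
    except socket.gaierror:
        return "unresolvable"
    result = "unreachable"
    for family, socktype, proto, _, addr in infos:
        with socket.socket(family, socktype, proto) as s:
            s.settimeout(timeout)
            try:
                s.connect(addr)
                return "open"
            except ConnectionRefusedError:
                result = "refused"  # host answered, but nothing listens on that port
            except socket.timeout:
                result = "timeout"  # no answer at all, e.g. packets dropped
            except OSError:
                pass  # e.g. network unreachable; try the next address
    return result
```

For example, check_target("node_exporter:9100") run from a container on the cloud network would be expected to return "open", while from the Docker host it is likely "unresolvable", because Docker's embedded DNS only answers queries from inside containers.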

Problem

You are trying to set up HTTP endpoint monitoring with Prometheus and blackbox_exporter by specifying something like the following:

    ...
      - job_name: 'blackbox'
        metrics_path: /probe
        params:
          module: [http_2xx]
        static_configs:
          - targets:
              - 'localhost:8080'
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: instance
          - target_label: __address__
            replacement: 127.0.0.1:9115
    ...

but the Prometheus targets page reports that the connection was refused.

Solution

First, set the preferred IP protocol to ip4 if you are not using IPv6, like this:

    modules:
      http_2xx:
        prober: http
        timeout: 5s
        http:
          preferred_ip_protocol: "ip4"
          valid_status_codes: [200]
          method: GET
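The ip4 setting matters because blackbox_exporter's preferred_ip_protocol defaults to ip6, so a name such as localhost can resolve to ::1 while the probed service only listens on 127.0.0.1, and the probe is then refused. The sketch below loosely mimics that preference-with-fallback resolution using the standard library. resolve_preferred is a hypothetical helper, not the exporter's code, and the fallback here only models the documented ip_protocol_fallback behaviour of trying the other family when resolution (not connection) fails.

```python
import socket


def resolve_preferred(host, preferred="ip6", fallback=True):
    """Pick one address for `host`, preferring one IP family (hypothetical helper).

    Loosely mimics blackbox_exporter's preferred_ip_protocol and
    ip_protocol_fallback options; it is not the exporter's implementation.
    """
    families = {"ip4": socket.AF_INET, "ip6": socket.AF_INET6}
    order = [preferred]
    if fallback:
        order += [p for p in families if p != preferred]
    for proto in order:
        try:
            infos = socket.getaddrinfo(host, None, families[proto], socket.SOCK_STREAM)
        except socket.gaierror:
            continue  # no address of this family; try the next one, if any
        return proto, infos[0][4][0]
    raise LookupError(f"cannot resolve {host!r} with {order}")
```

On a machine where localhost maps to both addresses, resolve_preferred("localhost") may return ("ip6", "::1"), which is exactly the case where an IPv4-only service refuses the probe.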

Then, if you started blackbox_exporter as a Docker container, make sure you use the container's IP address in the replacement field (tip taken from here). If Prometheus itself also runs in a container, 127.0.0.1 there refers to the Prometheus container, not to the host or to blackbox_exporter. You can find the container IP address with docker inspect blackbox_exporter | grep IPAddress.

So your Prometheus configuration should look like the following (note the different replacement IP):

      - job_name: 'blackbox'
        metrics_path: /probe
        params:
          module: [http_2xx]
        static_configs:
          - targets:
              - 'localhost:8080'
        relabel_configs:
          - source_labels: [__address__]
            target_label: __param_target
          - source_labels: [__param_target]
            target_label: instance
          - target_label: __address__
            replacement: 172.17.0.3:9115
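To see why the replacement address is what matters, it helps to follow the three relabel rules by hand: the original target becomes the target URL parameter, is copied into the instance label, and __address__ is overwritten with the exporter's address, so Prometheus ends up scraping the exporter rather than the target. The sketch below applies only these specific rules to a label dict and builds the resulting scrape URL. apply_blackbox_relabel and probe_url are hypothetical helpers, not Prometheus's general relabeling engine; the key sorting mirrors Go's url.Values.Encode.

```python
from urllib.parse import urlencode


def apply_blackbox_relabel(target, exporter_address):
    """Apply the three relabel rules of the 'blackbox' job (hypothetical helper)."""
    labels = {"__address__": target, "__metrics_path__": "/probe", "__scheme__": "http"}
    # - source_labels: [__address__] -> target_label: __param_target
    labels["__param_target"] = labels["__address__"]
    # - source_labels: [__param_target] -> target_label: instance
    labels["instance"] = labels["__param_target"]
    # - target_label: __address__ with replacement: <exporter_address>
    labels["__address__"] = exporter_address
    return labels


def probe_url(labels, params):
    """Build the URL that would be scraped for these labels (simplified)."""
    query = {name: list(values) for name, values in params.items()}
    for name, value in labels.items():
        if name.startswith("__param_"):
            # __param_<name> labels become URL parameters, overriding `params`
            query[name[len("__param_"):]] = [value]
    encoded = urlencode(sorted(query.items()), doseq=True)
    return f"{labels['__scheme__']}://{labels['__address__']}{labels['__metrics_path__']}?{encoded}"


labels = apply_blackbox_relabel("localhost:8080", "172.17.0.3:9115")
print(probe_url(labels, {"module": ["http_2xx"]}))
```

With 127.0.0.1:9115 as the replacement, the same URL would point back at the Prometheus container. Note also that the probe itself is sent by blackbox_exporter, so the target localhost:8080 is resolved from the exporter's network namespace, not from Prometheus's.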

I have Prometheus, nginx-vts, PHP and nginx-vts-exporter, each in a separate container. I'm trying to get metrics from the exporter into Prometheus, but it keeps reporting this error:

    Get http://127.0.0.1:9913/metrics: dial tcp 127.0.0.1:9913: connect: connection refused

This is my prometheus.yml:

    global:
      scrape_interval: 15s
      evaluation_interval: 15s

    scrape_configs:
      - job_name: nginx
        static_configs:
          - targets: ['127.0.0.1:9913']

This is the error from the exporter when I run the Compose file:

    fetchHTTP failed Get http://localhost/status/format/json: net/http: request canceled while waiting for connection (Client.Timeout exceeded while awaiting headers)

asked Mar 1, 2019 at 14:47 by Tyr_90


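I had the same error. The solution for me was to add the --net=host flag when running the Prometheus Docker container (not the Pushgateway container). Then everything just clicked.

So it looked something like:

    docker run \
        -p 9090:9090 \
        --net=host \
        prom/prometheus

(With --net=host on Linux, the container shares the host's network stack, so localhost inside it refers to the host, and Docker discards the -p mapping.)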

For good measure, this is the Prometheus config I was scraping with:

    - job_name: prometheus-local-discovery
      honor_timestamps: true
      scrape_interval: 30s
      scrape_timeout: 29s
      metrics_path: /metrics
      scheme: http
      honor_labels: true
      static_configs:
        - targets:
            - localhost:9091

(Please note that the .yml config file is indentation-sensitive, and YAML does not allow tabs for indentation, so indent with spaces.)

answered Oct 24, 2022 at 14:54
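Since YAML rejects tab characters in indentation, a stray tab is an easy way to end up with a config that Prometheus refuses to load. promtool check config remains the authoritative check; as a quick pre-check, a standard-library sketch could flag the offending lines. find_tab_indents is a hypothetical helper:

```python
def find_tab_indents(text):
    """Return 1-based numbers of lines whose leading whitespace contains a tab."""
    bad = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        # Leading whitespace = everything before the first non-space, non-tab char.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad.append(lineno)
    return bad
```

For example, find_tab_indents(open("prometheus.yml").read()) returns an empty list for a file indented only with spaces.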

When you run Prometheus together with Node Exporter or any other metrics-collecting container, you have to add that exporter to the Prometheus configuration file.

When defining targets under scrape_configs, specify the IP address of the host machine where Node Exporter is running.

Use ['localhost:9090'] for Prometheus and ['host_IP:9100'] for Node Exporter or any other metrics-collecting container.

answered Jul 23, 2020 at 8:25


Solving "connect: connection refused" in Prometheus


1. Check whether the node component is running

For example, check whether node_exporter is running by looking at its port:

    lsof -i :9100

2. Check whether the corresponding port is already in use

    lsof -i :<port number>

3. Check whether node_exporter is installed on this machine

Node Exporter's main job is to collect data for Prometheus. If it is not installed, there is nothing to scrape, and Prometheus naturally reports an error.
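The port checks in steps 1 and 2 can also be approximated where lsof is not installed. A minimal sketch, assuming a Linux host: try to bind the TCP port yourself, and if the bind fails with "address already in use", some process already holds it. port_in_use is a hypothetical helper; unlike lsof it cannot tell you which process owns the port, and binding ports below 1024 may require root.

```python
import errno
import socket


def port_in_use(port, host="0.0.0.0"):
    """Return True if binding host:port over TCP fails because it is taken."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        try:
            s.bind((host, port))
        except OSError as exc:
            if exc.errno == errno.EADDRINUSE:
                return True
            raise  # e.g. PermissionError for ports below 1024 without root
    return False
```

For example, port_in_use(9100) returning False on the node_exporter host suggests that nothing is listening there, which matches step 1 failing.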

Intelligent Recommendation

ftp: connect: Connection Refused

Win10 displayed when the command line is connected FTP «ftp: connect: connection refused» command line input I is Show «ftp: connect: Connection refused» if you do not use the time…

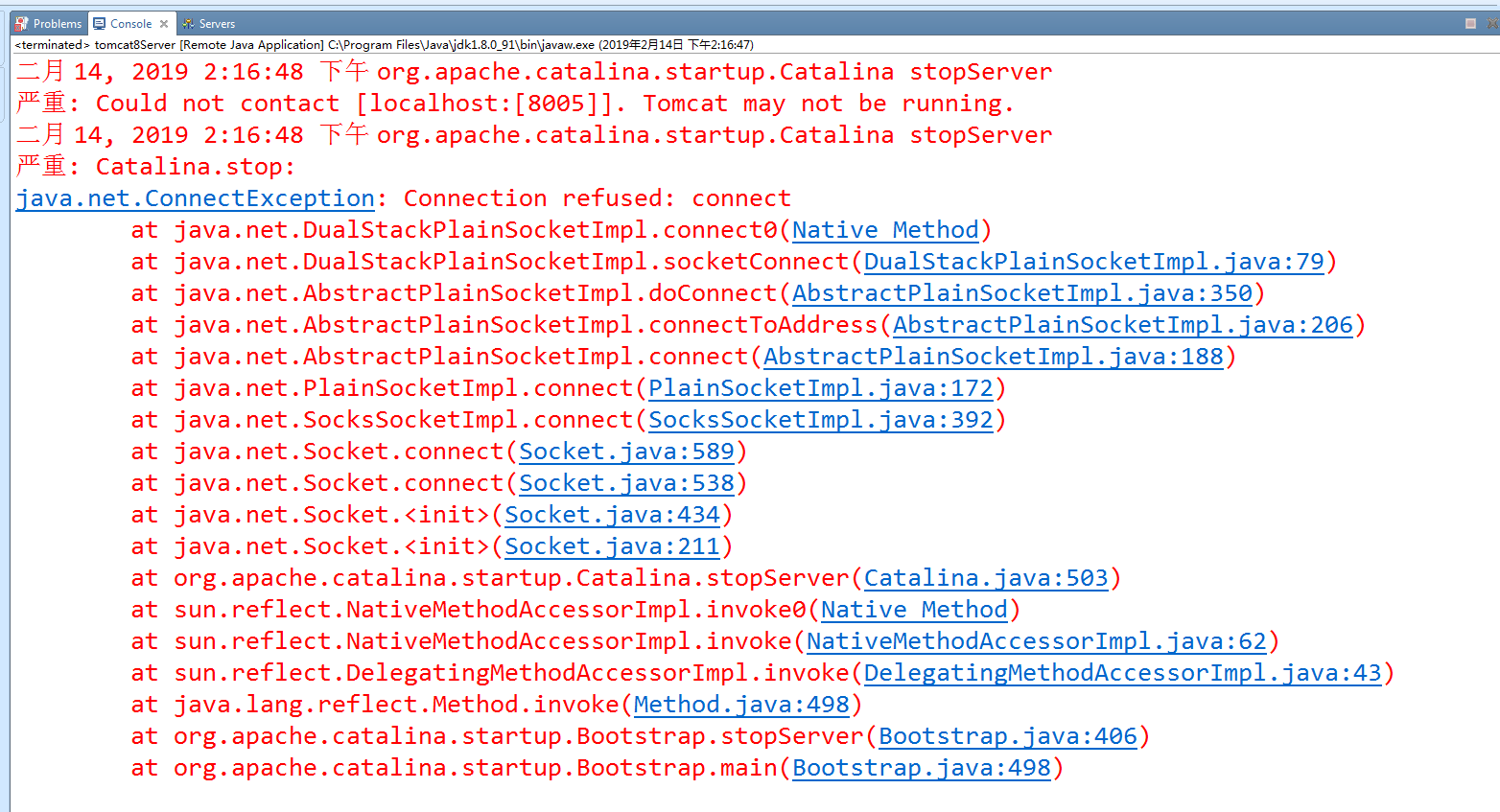

Connection refused: connect

It is normal when starting one, starting two, and one will not start. The online modification of the hosts file has been useless. I do n’t know why my copy is used. In this way, after modifying …

Connection refused connect

Literal meaning: The server cannot connect, the reason I found isPort 8080 is occupied by other processes We need to kill the process that occupies the port. In addition, I …

Error Connection refused: connect

The content is as follows: Start tomcat several times, resulting in the use of port 8080, find that port number, and then kill the corresponding process. Using the command prompt: Enter …

More Recommendation

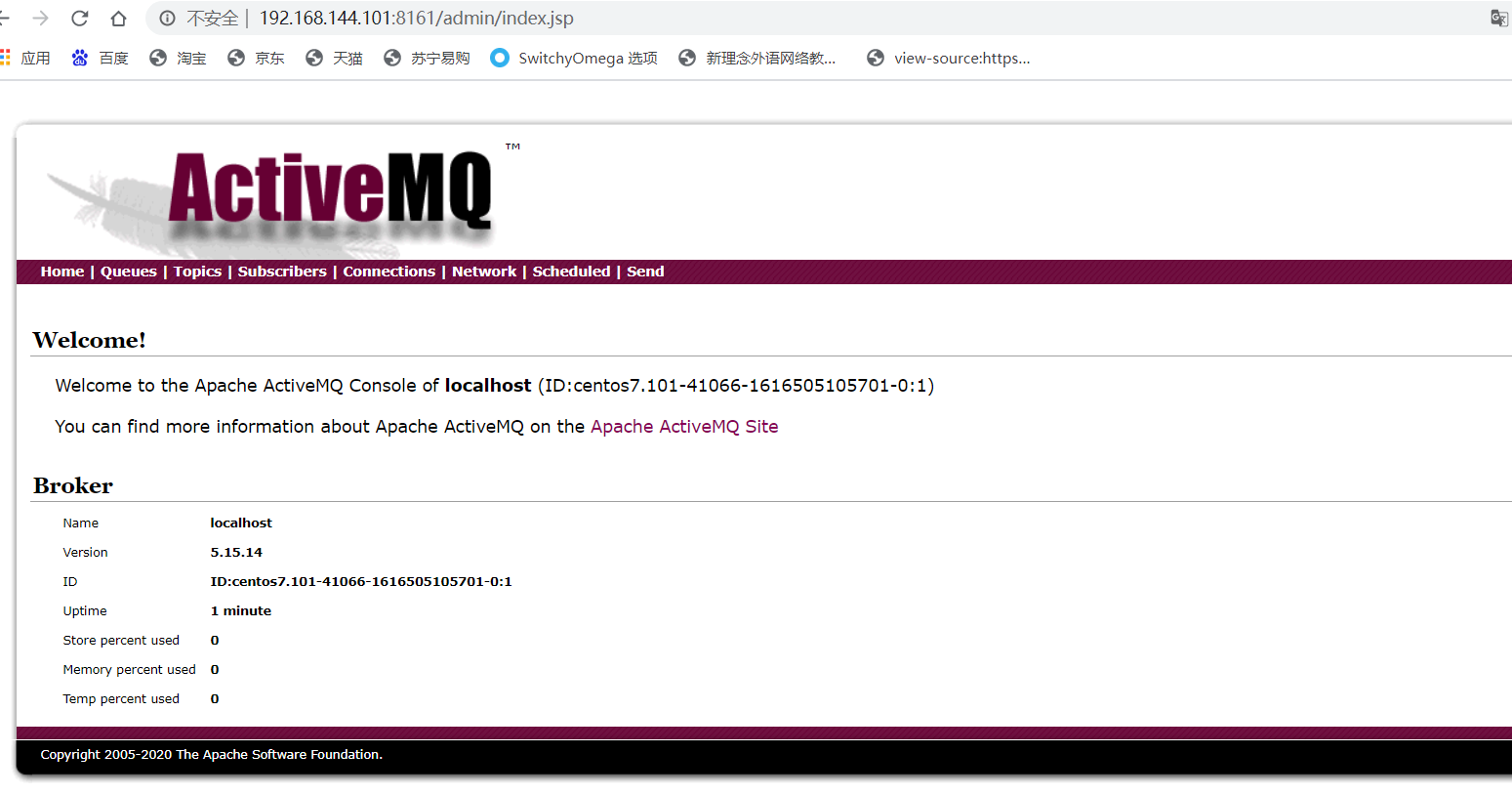

activemq Connection refused: connect

Scenes Has closed firewall Hosts can access virtual machines problem ActiveMQ with the Java link virtual machine is not connected Wrong Connection refused: connect solve Edit Profile ActiveMq.xml…

8761 Connection refused: connect

Ignore the exception caused by the configuration of Eureka.Client.Service-Url.defaultzone, and the re-overwriting configuration is good….